AMD and Xilinx have been working together on a system to accelerate AI processing. The pair, who have a long history of collaboration, now have an achievement to crow about: at the Xilinx Developer Forum in San Jose, California, on Tuesday, the companies announced that a system combining AMD Epyc CPUs and Xilinx FPGAs had set a new world record for AI inference.

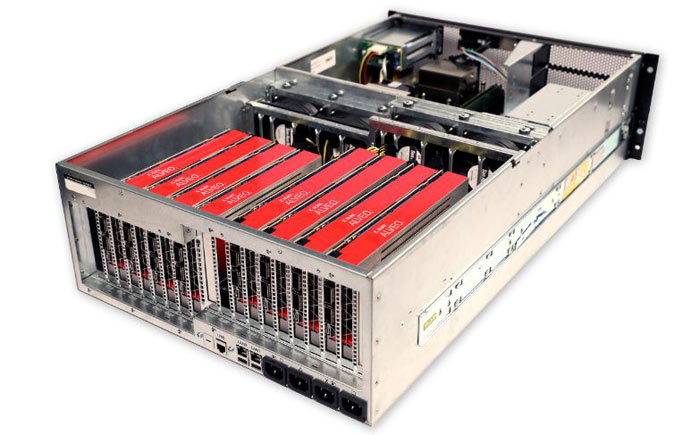

Xilinx CEO Victor Peng was joined on stage by AMD CTO Mark Papermaster to reveal the achievement. The companies said that a system with two AMD EPYC 7551 server CPUs and eight of the newly announced Xilinx Alveo U250 acceleration cards had scored 30,000 images per second on GoogLeNet, a widely used convolutional neural network.

As part of their shared vision for heterogeneous system architecture, the two companies have worked to optimise drivers and tune performance for interoperability between AMD Epyc CPUs and Xilinx FPGAs. In its press release on the collaborative tech, Xilinx says that the inference performance is “powered by Xilinx ML Suite, which allows developers to optimize and deploy accelerated inference and supports numerous machine learning frameworks such as TensorFlow”. Xilinx and AMD also work closely with the CCIX Consortium on cache coherent interconnects for accelerators.

In the wake of this announcement, we can expect more achievements of this kind from the pair. Xilinx says that there is a strong alignment between the AMD roadmap for high-performance AMD Epyc server and graphics processors and its own acceleration platforms, which span Alveo accelerator cards and the upcoming TSMC 7nm Versal portfolio.