ATI produce tool to increase Doom3 scores 'up to 35%' with AA enabled

ATI Technologies have this morning made a tool available to HEXUS which supposedly improves scores in most OpenGL games when antialiasing is enabled at high resolution. Improvements 'of up to 35%' in Doom3 are explicitly mentioned by sources within ATI. The tool seemingly changes the way the graphics card maps and accesses board memory, to better handle AA sample data.

As well as supporting Doom3, the tool is said to increase performance in all OpenGL titles when using antialiasing. With Doom3 the poster child for that graphics API, ATI's willingness to promote the increases in that application in particular is understandable.

The fix will shortly be rolled into CATALYST 5.11, according to ATI sources, and a beta drop of that driver will be made available for testing in due course, before the final WHQL driver from Terry Makedon's CATALYST team is made available for public download in November.

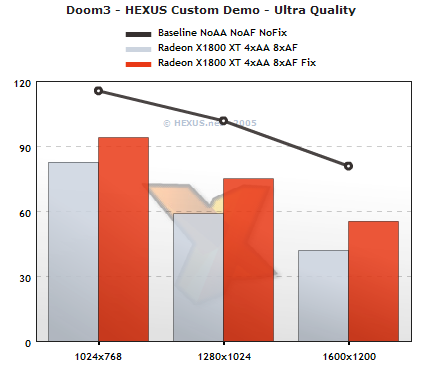

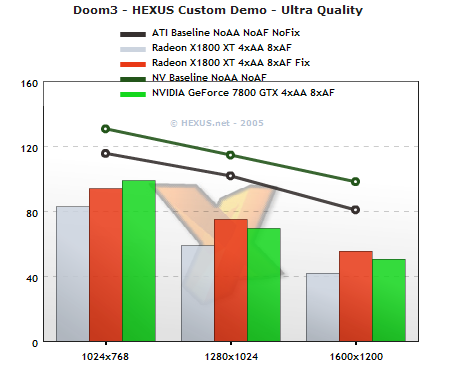

Doom3 Performance

On an Athlon 64 FX-57 system (ASUS A8N-SLI, 1GiB DDR400, Radeon X1800 XT 625/750, GeForce 7800 GTX 430/600), the tool shows the following increase in Doom3 scores using HEXUS's own custom demo. Timedemo 1, built in to the game, shows similar gains, and the HEXUS results show a 32% performance boost at 1600x1200.

Adding in results for NVIDIA's GeForce 7800 GTX running the 81.84 driver shows that, with the memory controller tweak, an ATI product can overtake NVIDIA's flagship single-board SKU for the first time since Doom3's release. The significance of that won't be lost on a great many people reading this.

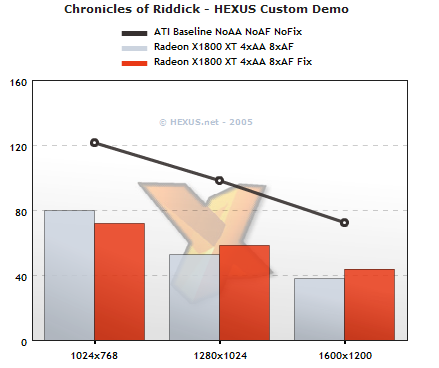

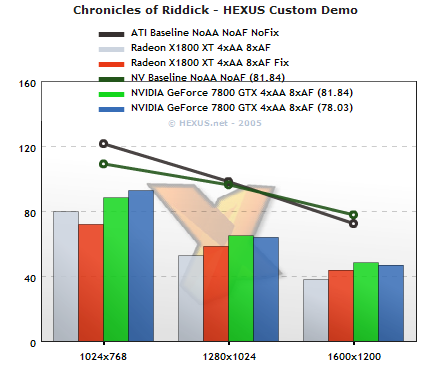

Chronicles of Riddick

The other popular OpenGL-based first person shooter, Starbreeze's Chronicles of Riddick, also shows gains on the X1800 XT, but only to the tune of 16% (still not insignificant) at 1600x1200 with antialiasing on.

NVIDIA with their latest driver also have gains in Riddick's performance, the blue bar on the following graph showing that the GeForce 7800 GTX is slower with that driver at 1280x1024 and 1600x1200, both with antialiasing on.

The tweak to the X1800 XT memory controller, seemingly optimising ROP access for Z compare at high resolution, isn't enough to take the ATI hardware past NVIDIA's fastest, although the gap certainly closes.

Summary

The tweak also shows gains similar to Riddick's in the Serious Sam 2 demo using the OpenGL version of Croteam's renderer, so OpenGL performance is generally up quite healthily across the board.

ATI's Eric Demers had this to say about the changes earlier on Beyond3D:

Our new MC has a view of all the requests for all the clients over time. The "longer" the time view, the greater the latency the clients see but the higher the BW is (due to more efficient requests re-ordering). The MC also looks at the DRAM activity and settings, and since it can "look" into the future for all clients, it can be told different algorithms and parameters to help it decide how to best make use of the available BW.

As well, the MC gets direct feedback from all clients as to their "urgency" level (which refers to different things for different client, but, simplifying, tells the MC how well they are doing and how much they need their data back), and adjusts things dynamically (following programmed algorithms) to deal with this. Get feedback from the DRAM interface to see how well it's doing too.

We are able to download new parameters and new programs to tell the MC how to service the requests, which clients's urgency is more important, basically how to arbitrate between over 50 clients to the dram requests. The amount of programming available is very high, and it will take us some time to tune things.

In fact, we can see that per application (or groups of applications), we might want different algorithms and parameters. We can change these all in the driver updates. The idea is that we, generally, want to maximize BW from the DRAM and maximize shader usage. If we find an app that does not do that, we can change things. You can imagine that AA, for example, changes significantly the pattern of access and the type of requests that the different clients make (for example, Z requests jump up drastically, so do rops). We need to re-tune for different configs. In this case, the OGL was just not tuning AA performance well at all. We did a simple fix (it's just a registry change) to improve this significantly. In future drivers, we will do a much more proper job.
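The trade-off Demers describes, where a longer "time view" buys bandwidth through better request re-ordering at the cost of latency, can be illustrated with a toy model. The sketch below is purely illustrative and makes no claim about how ATI's hardware or driver actually works: the `Request` type, the client names, the `window` parameter and the "prefer the open DRAM row" policy are all assumptions chosen to keep the example small.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    arrival: int  # position in the original arrival order
    client: str   # hypothetical client name, e.g. "Z" or "TEX"
    row: int      # DRAM row the request targets (toy model)


def schedule(requests, window):
    """Serve requests, choosing only among the first `window` pending ones.

    Toy policy (an assumption, not ATI's algorithm): prefer a request that
    hits the currently open row, otherwise fall back to the oldest request.
    """
    pending = list(requests)
    order = []
    open_row = None
    while pending:
        visible = pending[:window]
        pick = next((r for r in visible if r.row == open_row), visible[0])
        pending.remove(pick)
        order.append(pick)
        open_row = pick.row
    return order


def row_switches(order):
    """Count row activations; fewer switches stands in for higher bandwidth."""
    switches, prev = 0, None
    for r in order:
        if r.row != prev:
            switches += 1
        prev = r.row
    return switches


def worst_delay(order):
    """Largest number of slots any request was pushed back by re-ordering."""
    return max(pos - r.arrival for pos, r in enumerate(order))


if __name__ == "__main__":
    # Two hypothetical clients alternating between two DRAM rows.
    reqs = [Request(i, "Z" if i % 2 == 0 else "TEX", i % 2) for i in range(8)]
    for window in (1, 4):
        order = schedule(reqs, window)
        print(window, row_switches(order), worst_delay(order))
```

With a window of 1 the requests are served strictly in arrival order, so every request switches rows and nothing is delayed. With a window of 4 the same eight requests need only two row activations, but the oldest request for the second row waits three slots longer than it otherwise would. A real controller would also weigh per-client urgency, which this sketch leaves out.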

Overall, the programmable nature of the new memory controller in the majority of ATI's new graphics chips means that, over time and with enough analysis, ATI can make per-application changes to optimise memory access for any given application at any number of resolutions, allowing the company to extract the most out of its hardware.

The largest single functional block on the new GPU silicon, the memory controller - chiefly architected by ATI's Director of Technology, Joe Macri - is one of the two large keys to unlocking the performance of the new hardware. Future driver releases from ATI may show other large gains in other games, not just in OpenGL.

The memory controller on NVIDIA's latest GPUs is also programmable to some extent by the driver, to tweak certain parameters pertaining to memory access, although it remains to be seen if they have similar ranges of performance headroom to find in their own products.

Just goes to show hardware is nothing without good software. Driver development and release schedules just got interesting again.