8K and Creator Analysis

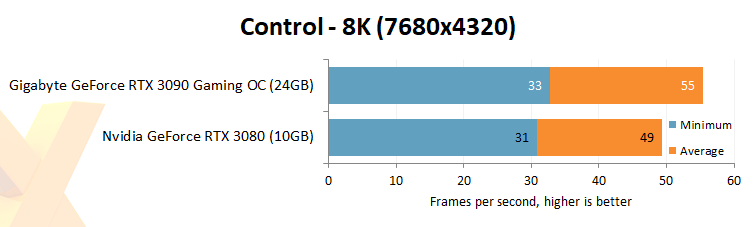

Nvidia promotes the RTX 3090 as the first true 8K gaming card. The proviso is that games take advantage of framerate-boosting DLSS technology and that settings are knocked down from absolute maximum to, shall we say, decent. Tested this way, with DLSS rendering at 1440p (the 9x scaling factor introduced in version 2.1), ray tracing set to high, and the game's resolution set to 8K (using DSR to mimic an 8K screen on a 4K panel), framerates are decent for both 30-series cards. The RTX 3090's performance improvement is independent of framebuffer size; it's the ability to scale so highly on DLSS that keeps framerates healthy.

Raising the DLSS render resolution to 2880p whilst keeping everything else the same reduces the RTX 3090's framerates to 37/23fps, and removing DLSS altogether in favour of 4x MSAA makes the game crawl along at sub-10fps. One needs the latest iteration of DLSS when running ray tracing at a pseudo-8K resolution.
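To put the scaling factors in context, here is a minimal sketch of the pixel arithmetic behind the two DLSS settings mentioned above. The pixel dimensions are the standard 16:9 figures for each resolution name; the dictionary and function names are purely illustrative and have nothing to do with how DLSS itself is implemented.

```python
# Standard 16:9 resolutions as (width, height) in pixels.
# These names and this helper are illustrative assumptions, not a DLSS API.
RESOLUTIONS = {
    "1440p": (2560, 1440),
    "2880p": (5120, 2880),
    "8K": (7680, 4320),
}


def pixel_scale(render: str, output: str) -> float:
    """Return how many output pixels exist per rendered pixel."""
    render_w, render_h = RESOLUTIONS[render]
    output_w, output_h = RESOLUTIONS[output]
    return (output_w * output_h) / (render_w * render_h)


for render in ("1440p", "2880p"):
    factor = pixel_scale(render, "8K")
    print(f"{render} -> 8K: {factor:.2f}x the pixels")
```

Rendering at 1440p means the GPU shades one-ninth of the pixels that are eventually displayed, whereas 2880p leaves it shading a little under half, which goes some way to explaining the framerate drop.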

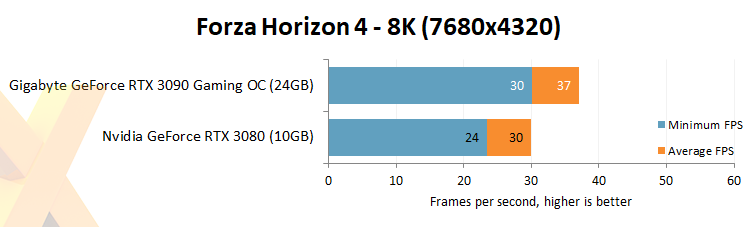

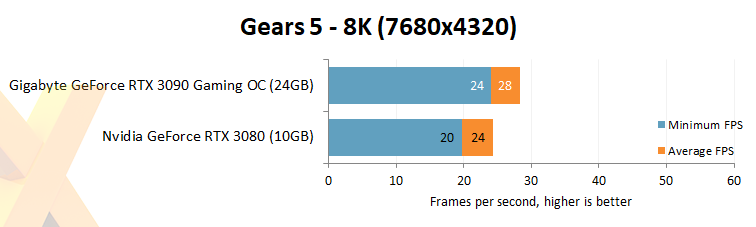

We can further examine the cards' prowess by looking at how well the RTX 3090 and RTX 3080 handle an extreme 8K load, running Forza Horizon 4 and Gears 5 at the higher resolution (8K rendered on a 4K screen via DSR) with the same image-quality settings you see on pages nine and 10. There's no ray tracing or DLSS at play here.

Performance is reduced drastically compared to 4K. Sure, one could lower the settings to achieve 8K60, but these are the best gaming graphics cards in the world, and one shouldn't have to.

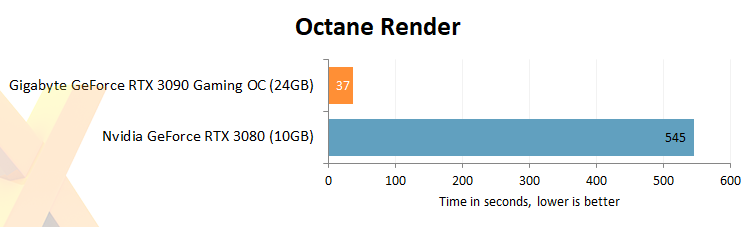

We believe it's a mistake for Nvidia to call this a GeForce card. It's not built primarily for gaming, even though it does well in that regard. The RTX 3090, instead, is a far greater boon for content creators who need to render from large datasets that don't fit into the VRAM of other, less powerful cards.

OctaneRender is popular in the content-creation industry, where it's absolutely worth shelling out extra cash for hardware that can reduce render times massively. The above graph takes a corner case as an example. The large project has approximately 14GB of data, which conveniently exceeds the framebuffer of everything other than the last-gen Titan RTX and this RTX 3090, both of which feature 24GB.

VRAM-exceeding projects can still be completed using out-of-core rendering, which holds the excess data in system memory rather than VRAM, and this is how the 10GB-equipped RTX 3080 runs this particular workload.
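As a rough illustration of that decision, the sketch below checks which cards can hold the roughly 14GB project entirely in VRAM. The capacities come from the figures quoted in this review; the function name is hypothetical, and the comparison is deliberately simplified in that it ignores any VRAM the renderer, driver, or display output would reserve for themselves.

```python
# Approximate project size quoted in the text, in GB.
DATASET_GB = 14

# VRAM capacities as stated in this review, in GB.
VRAM_GB = {
    "RTX 3080": 10,
    "RTX 3090": 24,
    "Titan RTX": 24,
}


def render_mode(vram_gb: float, dataset_gb: float) -> str:
    """Simplified check: does the whole dataset fit in VRAM?

    Assumption: no overhead is reserved. A real renderer needs headroom.
    """
    return "in-core" if dataset_gb <= vram_gb else "out-of-core"


for card, vram in VRAM_GB.items():
    print(f"{card} ({vram}GB): {render_mode(vram, DATASET_GB)}")
```

Only the two 24GB cards stay in-core under this simplified check; the RTX 3080 has to spill to system memory.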

The results are startling. The RTX 3080 takes over nine minutes to run through the dataset, flitting between VRAM and system RAM as and when needed. The RTX 3090, meanwhile, holds the entire dataset in memory, thereby smashing through the render in just 37 seconds, or almost 15x as quickly. Here is where the extra VRAM can come in genuinely useful, and not in gaming. Of course, this is a best-case scenario for the RTX 3090; projects that are, say, 8GB will render only 20 percent more quickly on the range-topping card, as both cards are able to keep everything in onboard memory.
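The speed-up figure follows directly from the two render times. A quick check, treating "over nine minutes" as exactly nine minutes and therefore as a lower bound (the variable and function names are illustrative):

```python
def speedup(slow_seconds: float, fast_seconds: float) -> float:
    """Return how many times faster the quicker run is."""
    return slow_seconds / fast_seconds


# "Over nine minutes" is taken as exactly 9 minutes, so this is a lower bound.
rtx_3080_seconds = 9 * 60
rtx_3090_seconds = 37

ratio = speedup(rtx_3080_seconds, rtx_3090_seconds)
print(f"RTX 3090 is at least {ratio:.1f}x as quick")
```

Nine minutes flat works out to roughly 14.6x, so any time beyond the nine-minute mark nudges the real figure towards the quoted 15x.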

This model, therefore, ought to be named the RTX Titan (Ampere). Creators need to understand their workflow restrictions and evaluate whether the RTX 3090 can help in cutting render times. Time is money, after all.