Nvidia boss Jen-Hsun Huang kicked off Nvidia's Gamescom conference by declaring that "every rumour about the company's next-gen graphics card is wrong". Interesting… though, as it turned out, he was only joking. He then went on to describe the holy grail of graphics - raytracing - in which the paths of light rays are calculated as they bounce off surfaces. Raytracing matters because it is a far better proxy than classic rasterisation for how natural light is perceived by the human eye.

With enough light rays bouncing around (tens of millions for even a simple image), a recursive algorithm can generate full-screen, high-resolution images in real time and at high frame rates, provided the GPU has enough horsepower.
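To make the "recursive algorithm" concrete, here is a toy sketch of a recursive ray tracer: one primary ray per pixel, plus a mirror-reflection ray spawned recursively at each reflective hit, up to a fixed depth. The scene, light direction, greyscale shading and all names (`Tracer`, `render`, `max_depth` and so on) are hypothetical illustrations, not anything Nvidia showed. The `rays_cast` counter shows why ray counts climb so quickly: they scale with resolution multiplied by bounce depth.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]

def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def normalize(a: Vec) -> Vec:
    return scale(a, 1.0 / math.sqrt(dot(a, a)))

@dataclass
class Sphere:
    center: Vec
    radius: float
    brightness: float    # greyscale surface colour, 0..1
    reflectivity: float  # 0 = matte, 1 = perfect mirror

LIGHT_DIR = normalize((1.0, 1.0, -1.0))  # hypothetical direction towards a distant light
BACKGROUND = 0.1                         # brightness returned when a ray hits nothing

def hit_distance(origin: Vec, direction: Vec, s: Sphere) -> Optional[float]:
    """Nearest t > 0 where origin + t*direction meets the sphere (direction is unit length)."""
    oc = sub(origin, s.center)
    b = dot(oc, direction)
    c = dot(oc, oc) - s.radius * s.radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-4:  # small epsilon so a bounce ray does not re-hit its own surface
            return t
    return None

class Tracer:
    def __init__(self, spheres: List[Sphere], max_depth: int):
        self.spheres = spheres
        self.max_depth = max_depth
        self.rays_cast = 0

    def trace(self, origin: Vec, direction: Vec, depth: int = 0) -> float:
        self.rays_cast += 1
        best = None
        for s in self.spheres:
            t = hit_distance(origin, direction, s)
            if t is not None and (best is None or t < best[0]):
                best = (t, s)
        if best is None:
            return BACKGROUND
        t, s = best
        point = add(origin, scale(direction, t))
        normal = normalize(sub(point, s.center))
        local = s.brightness * max(0.0, dot(normal, LIGHT_DIR))  # simple diffuse term
        if depth >= self.max_depth or s.reflectivity == 0.0:
            return local
        # Mirror bounce: r = d - 2(d.n)n, then trace that ray recursively.
        bounce = normalize(sub(direction, scale(normal, 2.0 * dot(direction, normal))))
        return (1 - s.reflectivity) * local + s.reflectivity * self.trace(point, bounce, depth + 1)

def render(width: int, height: int, tracer: Tracer) -> List[List[float]]:
    """One primary ray per pixel from a pinhole camera at the origin looking down +z."""
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            u = (2 * (x + 0.5) / width - 1) * width / height
            v = 1 - 2 * (y + 0.5) / height
            row.append(tracer.trace((0.0, 0.0, 0.0), normalize((u, v, 1.0))))
        image.append(row)
    return image

scene = [
    Sphere((0.0, 0.0, 3.0), 1.0, brightness=0.9, reflectivity=0.5),
    Sphere((0.0, -101.0, 3.0), 100.0, brightness=0.6, reflectivity=0.3),  # huge sphere as a floor
]
tracer = Tracer(scene, max_depth=3)
image = render(64, 48, tracer)
print(f"{tracer.rays_cast} rays for {64 * 48} pixels")
```

Each primary ray can spawn at most `max_depth` extra rays, so a 1080p frame at depth 3 could need up to 1920 × 1080 × 4 ≈ 8.3 million rays in this toy model alone, before any shadow rays or multiple samples per pixel.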

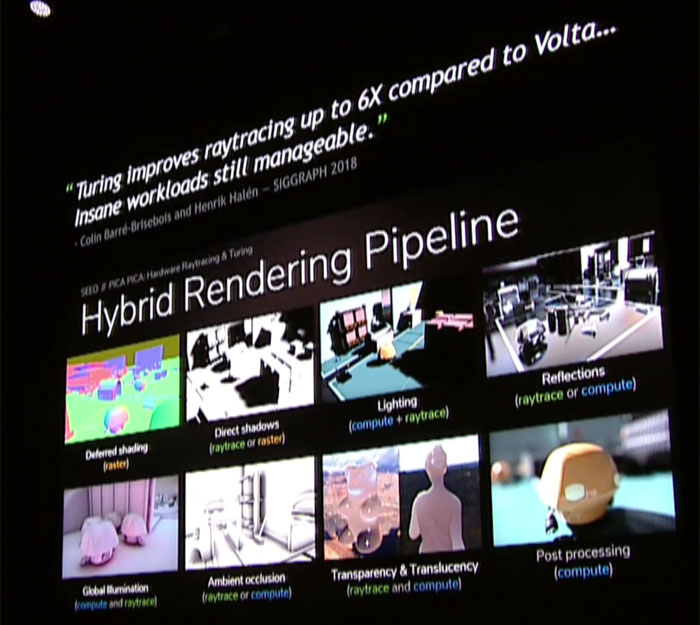

Raytracing is a well-established technique. However, according to Jen-Hsun, full-screen, high-speed, real-time raytracing would otherwise have been about 10 years away. Speeding up this process, he said, is Nvidia's RTX technology, which combines traditional rasterisation with super-occluded raytracing for hybrid rendering, taking the best bits of both. This is the technology that underpins the new RTX 2000-series.

You will already know about Microsoft's DirectX Raytracing (DXR) API, which Nvidia's RTX supports, and it is this software-side technology that enables the dual-render RTX 2000-series to push back the boundaries of real-time image quality. Remember the Star Wars raytracing demo at GDC, running on a quad-GPU, $68,000 DGX machine?

On stage, however, Huang showed that the Turing architecture, which powers the RTX chips and weighs in at up to 18.9bn transistors, can now run the same demo faster on a single card: about 22fps, compared with 18fps.

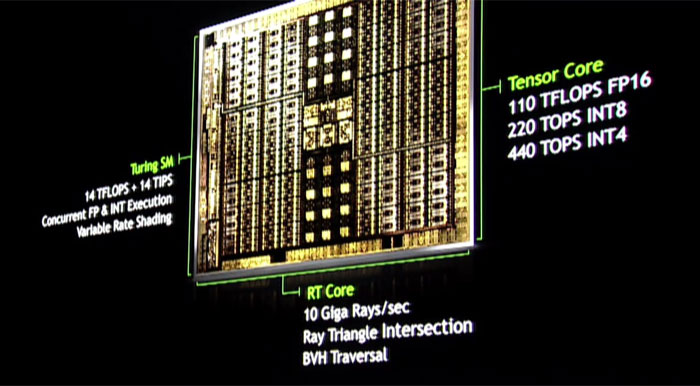

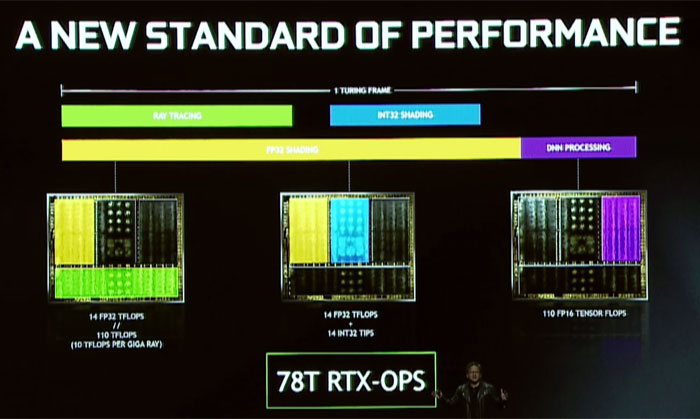

The chip offers up to 14TFLOPS of single-precision compute, plus variable-rate shading (for foveated VR). A new unit, the RT Core, can handle 8x more rays per second. Add to this Nvidia's "completely brand new", reworked SM (streaming multiprocessor) cores. Lastly, the Tensor Core provides the foundation for deep-learning techniques applied to image-enhancement tasks (see one example, DLSS, below).
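The 14TFLOPS figure can be roughly reproduced from the CUDA-core counts and boost clocks in the specification table at the end of this article. This sketch assumes the usual convention for quoting peak FP32 throughput: every CUDA core retires one fused multiply-add (counted as two floating-point operations) per clock. It is a theoretical peak, not a measured result.

```python
# CUDA cores and boost clock (MHz, Founders Edition "OC") from the spec table.
cards = {
    "RTX 2080 Ti": (4352, 1635),
    "RTX 2080": (2944, 1800),
    "RTX 2070": (2304, 1710),
}

# Assumption: one fused multiply-add per core per clock = 2 FLOPs.
FLOPS_PER_CORE_PER_CLOCK = 2

def peak_fp32_tflops(cuda_cores: int, boost_mhz: int) -> float:
    """Theoretical peak single-precision throughput in TFLOPS."""
    return cuda_cores * FLOPS_PER_CORE_PER_CLOCK * boost_mhz * 1e6 / 1e12

for name, (cores, mhz) in cards.items():
    print(f"{name}: {peak_fp32_tflops(cores, mhz):.1f} TFLOPS")
# RTX 2080 Ti: 14.2 TFLOPS
# RTX 2080: 10.6 TFLOPS
# RTX 2070: 7.9 TFLOPS
```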

The point, he said, is that great image quality will take more than rasterisation alone. You will want raytracing (RT core), rasterisation (SM core), a crazy-efficient occlusion engine, and DNN processing so the AI can generate 'missing pixels' for higher-resolution gaming (Tensor core). It's potentially three types of core working together, not one.

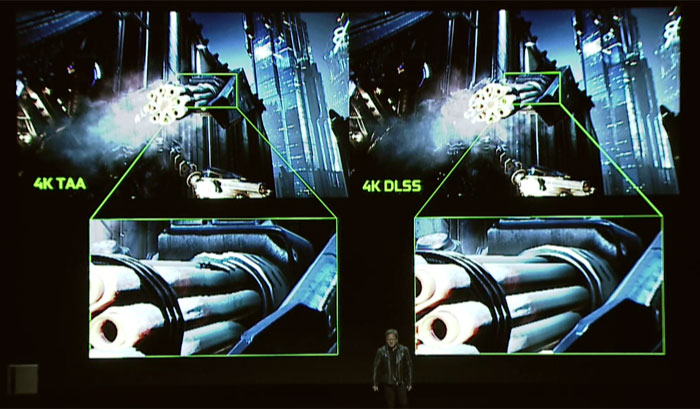

Temporal Anti-Aliasing (TAA) vs Deep Learning Super Sampling (DLSS) in Epic's Infiltrator demo at 4K

As an example, Huang showed off the Infiltrator demo, built on Unreal Engine 4, running at 78fps at 4K, compared with 38fps on a GeForce GTX 1080 Ti. The increase is mainly down to the Tensor cores, which use a trained neural network to fill in the missing pixels at that resolution.
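The two on-stage comparisons are easier to weigh as speed-ups and frame times. This sketch uses only the figures quoted in this article (22fps vs 18fps for the Star Wars demo, 78fps vs 38fps for Infiltrator at 4K). Note that neither comparison is like-for-like: one pits a single Turing card against a quad-GPU machine, the other pits DLSS on Turing against TAA on a GTX 1080 Ti.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds spent on each frame at a given frame rate."""
    return 1000.0 / fps

def speedup(new_fps: float, old_fps: float) -> float:
    """How many times faster the new result is than the old one."""
    return new_fps / old_fps

demos = {
    "Star Wars (1x Turing vs quad-GPU DGX)": (22, 18),
    "Infiltrator 4K (Turing + DLSS vs GTX 1080 Ti + TAA)": (78, 38),
}

for name, (new, old) in demos.items():
    print(f"{name}: {speedup(new, old):.2f}x, "
          f"{frame_time_ms(old):.1f} ms -> {frame_time_ms(new):.1f} ms per frame")
# Star Wars (1x Turing vs quad-GPU DGX): 1.22x, 55.6 ms -> 45.5 ms per frame
# Infiltrator 4K (Turing + DLSS vs GTX 1080 Ti + TAA): 2.05x, 26.3 ms -> 12.8 ms per frame
```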

Raytracing comes into its own when lighting is hard - shadows being a classic example. There's no need for hacks such as contact-hardening shadows, so expect to see raytracing used on Turing to create lifelike shadows based on physically correct rendering.
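The core of a raytraced shadow is a "shadow ray": from the surface point, fire a ray towards the light and check whether anything blocks it before it arrives. The minimal sketch below assumes sphere-only occluders and a hypothetical scene. Its `visibility` helper also samples a small square area light on a grid, which hints at why soft penumbrae (and their hardening near contact points) fall out of the geometry instead of needing a separate approximation. It is an illustration of the general technique, not Nvidia's implementation.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float, float]
Occluder = Tuple[Vec, float]  # (centre, radius) of a sphere

def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def ray_hits_sphere(origin: Vec, direction: Vec, center: Vec, radius: float, t_max: float) -> bool:
    """True if the unit-length ray meets the sphere at some 1e-4 < t < t_max."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return False
    root = math.sqrt(disc)
    return any(1e-4 < t < t_max for t in (-b - root, -b + root))

def in_shadow(point: Vec, light_pos: Vec, occluders: List[Occluder]) -> bool:
    """Cast one shadow ray from the point to a point light; blocked means shadowed."""
    to_light = sub(light_pos, point)
    dist = math.sqrt(dot(to_light, to_light))
    direction = (to_light[0] / dist, to_light[1] / dist, to_light[2] / dist)
    return any(ray_hits_sphere(point, direction, c, r, dist) for c, r in occluders)

def visibility(point: Vec, light_center: Vec, light_size: float,
               occluders: List[Occluder], n: int = 4) -> float:
    """Fraction of an n x n grid of samples on a square area light (in the x-z plane) that is visible."""
    visible = 0
    for i in range(n):
        for j in range(n):
            ox = ((i + 0.5) / n - 0.5) * light_size
            oz = ((j + 0.5) / n - 0.5) * light_size
            sample = (light_center[0] + ox, light_center[1], light_center[2] + oz)
            if not in_shadow(point, sample, occluders):
                visible += 1
    return visible / (n * n)

# Hypothetical scene: a light overhead and a sphere floating between it and the floor.
light = (0.0, 10.0, 0.0)
occluders = [((0.0, 5.0, 0.0), 1.0)]
print(in_shadow((0.0, 0.0, 0.0), light, occluders))  # True: the sphere blocks the path
print(in_shadow((5.0, 0.0, 0.0), light, occluders))  # False: clear line to the light
```

Values of `visibility` between 0 and 1 correspond to the penumbra; a real renderer would use many more (and randomised) samples per pixel.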

Turing's combined throughput (14TFLOPS of FP32 shading on the SM cores, ray throughput on the RT cores, and FP16 on the Tensor cores) is rolled into a weighted sum of about 78 trillion "RTX-OPS". That's a new metric.
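As a weighted sum, the metric is simple arithmetic. In the sketch below, the per-unit throughputs and weights for the top-end card are assumptions taken from Nvidia's Turing architecture whitepaper rather than from the keynote. The whitepaper also counts INT32 shading, and its weights roughly reflect how busy Nvidia models each unit as being during a frame. Treat the numbers as illustrative.

```python
# Assumed per-unit peak throughput for the top-end Turing card,
# in trillions of operations per second (figures from Nvidia's Turing whitepaper).
throughput = {
    "fp32_shading": 14.0,   # TFLOPS on the SM cores
    "int32_shading": 14.0,  # trillion integer ops/s on the SM cores
    "rt_core": 100.0,       # Nvidia's "TFLOPS-equivalent" for RT core ray work
    "tensor_fp16": 114.0,   # FP16 TFLOPS on the Tensor cores
}

# Assumed weights: they do not sum to 1, so this is a weighted sum, not an average.
weights = {
    "fp32_shading": 0.80,
    "int32_shading": 0.28,
    "rt_core": 0.40,
    "tensor_fp16": 0.20,
}

def rtx_ops(throughput: dict, weights: dict) -> float:
    """Weighted sum of per-unit throughput, in trillions of 'RTX-OPS'."""
    return sum(throughput[unit] * weights[unit] for unit in throughput)

print(f"{rtx_ops(throughput, weights):.1f} trillion RTX-OPS")  # 77.9 trillion RTX-OPS
```

The RT core term dominates, which is why the headline figure sits far above the 14TFLOPS of conventional shading.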

Using different parts of the chip for distinct tasks is a new way to do graphics. Of course, developers will have to build support for the architecture and RTX platform into forthcoming titles. You would expect Unreal Engine to do so, but time will tell how successful it will be.

We come away with the feeling that Nvidia is creating hardware and software that combine multiple types of core for best-in-class, high-quality rendering. Lighting, in particular, looks set to be the poster-child for the approach, with incredibly lifelike results.

| | GeForce RTX 2080 Ti | GeForce RTX 2080 | GeForce RTX 2070 |
|---|---|---|---|
| Price | $1,199 / £1,099 | $799 / £749 | $599 / £569 |
| CUDA Cores | 4,352 | 2,944 | 2,304 |
| Boost Clock | 1,635MHz (OC) | 1,800MHz (OC) | 1,710MHz (OC) |
| Base Clock | 1,350MHz | 1,515MHz | 1,410MHz |
| Memory | 11GB GDDR6 | 8GB GDDR6 | 8GB GDDR6 |
| USB Type-C and VirtualLink | Yes | Yes | Yes |
| Maximum Resolution | 7680x4320 | 7680x4320 | 7680x4320 |
| Connectors | DisplayPort, HDMI, USB Type-C | DisplayPort, HDMI, USB Type-C | DisplayPort, HDMI, USB Type-C |
| Graphics Card Power | 260W | 225W | 175W |
| Ordering info | Pre-order now, ships 20th Sept | Pre-order now, ships 20th Sept | Sign up for notifications |