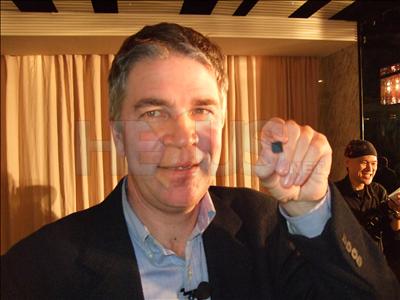

At a COMPUTEX press conference earlier today, NVIDIA's Michael Rayfield was on hand to unveil the manufacturer's Tegra family of processors.

Dubbed a computer-on-a-chip, Tegra features an ARM11 processor, an HD video processor and an ultra-low-power GeForce GPU. The chip measures one tenth the size of Intel's Menlow platform, and NVIDIA had no shortage of bold claims.

Tegra will provide a whopping battery life of 26 hours when playing 720p video, compared to 1 hour when using Intel's Atom chip, says NVIDIA.

The Tegra series, which builds upon NVIDIA's early '08 processor, the APX 2500, will launch in two SKUs: the Tegra 650 and the Tegra 600.

Both chips feature an ARM11 processor, clocked at 800MHz and 700MHz, respectively. Whilst both chips will play back 720p without kicking up a fuss, the Tegra 650 will also manage 1080p H.264 footage. Perhaps even more impressive is the fact that NVIDIA showed a Tegra-based MID playing back 720p video whilst consuming under 1 watt of power.

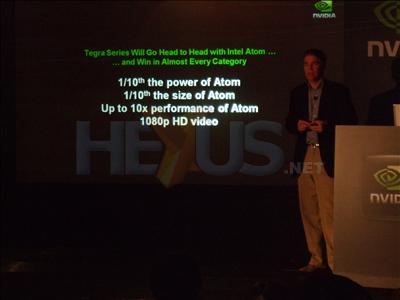

So then, compared to Intel's Atom, NVIDIA tells us that Tegra offers better battery life, uses less power, is much smaller and will play back HD video without a problem. An all-round winner, right?

Well, maybe not. Tegra might seem incredible at first glance, and the live product demonstrations on show were impressive - the 720p playback really was flawless. NVIDIA is marketing Tegra as an Atom-beater; we, however, feel that Atom isn't the direct competitor. Atom can be found in mobile devices such as MIDs and UMPCs, but it lives in laptops too. Tegra, it appears, is limited to just mobile devices - for now, at least.

A better comparison, in fact, might be made with older chips such as AMD's all-in-one media processor, the Imageon, or indeed Intel's 2007 system-on-a-chip (SoC), the CE 2110.

The computer-on-a-chip isn't an entirely new formula, and although Tegra's HD capability benefits from an onboard HD processor, the ARM11 CPU could be the weak link. ARM11, a 32-bit RISC processor, is widely used in mobile devices, but suffers from being incompatible with x86 software. Intel's x86 Atom doesn't face such an issue and can run PC software without the need to recompile code.

Despite our concerns, we're quietly excited about Tegra. NVIDIA states that the design has been built from the ground up, as opposed to taking an existing architecture and shrinking it down. It claims, therefore, that Tegra will improve in performance year after year, whilst retaining its tiny power envelope.

Though Tegra prices haven't yet been announced, NVIDIA states that we'll be seeing Tegra-based devices from various partners later this year. We'll be keeping a close eye on those, but in the meantime, here's the complete Tegra specification:

| | NVIDIA Tegra 650 | NVIDIA Tegra 600 |
|---|---|---|
| Processor and Memory Subsystem | ARM11 MPCore @ 800MHz; 16/32-bit LP-DDR; NAND Flash support | ARM11 MPCore @ 700MHz; 16/32-bit LP-DDR; NAND Flash support |
| HD AVP (High Definition Audio Video Processor) | 1080p H.264 decode; 720p H.264 encode; supports multi-standard audio formats, including AAC, AMR, WMA and MP3; JPEG encode and decode acceleration | 720p H.264 and VC-1/WMV9 decode; 720p H.264 encode; supports multi-standard audio formats, including AAC, AMR, WMA and MP3; JPEG encode and decode acceleration |
| ULP (Ultra Low Power) GeForce | OpenGL ES 2.0; programmable pixel shader; programmable vertex and lighting; advanced 2D/3D graphics | OpenGL ES 2.0; programmable pixel shader; programmable vertex and lighting; advanced 2D/3D graphics |
| NVIDIA nPower Technology | Low-power design delivers over 130 hours audio and 30 hours HD video playback | Low-power design delivers over 100 hours audio and 10 hours HD video playback |
| Imaging | Up to 12 megapixel camera sensor support; integrated ISP; advanced imaging features | Up to 12 megapixel camera sensor support; integrated ISP; advanced imaging features |
| Display Subsystem | True dual-display support; maximum display resolutions supported: 1080p (1920x1080) HDMI 1.3, WSXGA+ (1680x1050) LCD, SXGA (1280x1024) CRT, NTSC/PAL TV output | True dual-display support; maximum display resolutions supported: 720p (1280x720) HDMI 1.3, SXGA (1280x1024) LCD, SXGA (1280x1024) CRT, NTSC/PAL TV output |