Tech background and appearance

A quick recap on GeForce 8600 GT first.

The NVIDIA chopping machine has been dusted down and brought into action. Its job was to take GeForce 8800 as a technology base and pare it down to meet a certain price point whilst keeping all the DX10 gubbins intact.

In terms of pure performance, the chopper has been busier than we'd like, really. Unified scalar shader units are down to 32 from the range-topping 128. The memory bus is down from 384-bit to a meagre 128-bit, and ROPs have been cut from 24 to 8. Sounds all bad, right?

The good news is that the texture-addressing potential of G84 (GeForce 8600 GT/GTS) has been doubled, per texture processor, compared with G80. On paper, that makes the G84 design somewhere around one-third of a GeForce 8800 GTX, conveniently ignoring frequencies.
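As a rough check on the "around one-third" figure, the sketch below divides the G84 unit counts quoted in the previous paragraph by the G80 ones, ignoring clocks just as the text does. The text gives no absolute texture-unit counts, so texturing is left out here; the dictionary names are purely illustrative.

```python
# Cut-down ratios of G84 (GeForce 8600 GT) relative to G80 (GeForce 8800 GTX),
# using only the unit counts quoted in the review. Clock speeds are ignored,
# and texture units are omitted because the review gives no counts for them.
G80 = {"shader_units": 128, "memory_bus_bits": 384, "rops": 24}
G84 = {"shader_units": 32, "memory_bus_bits": 128, "rops": 8}


def unit_ratios(small, big):
    """Return small/big for every key present in both configurations."""
    return {key: small[key] / big[key] for key in big if key in small}


if __name__ == "__main__":
    for name, ratio in unit_ratios(G84, G80).items():
        print(f"{name}: {ratio:.2f}")
```

Shaders come out at a quarter while the memory bus and ROPs sit at a third, which is why the doubled per-processor texture addressing matters for the overall "one-third" estimate.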

The later introduction of G84 also brings a process shrink to 80nm, and NVIDIA bolsters the SKU's multimedia performance by introducing its bitstream processor (BSP) and VP2 video processor. These aid the usually CPU-intensive (and expensive) task of HD decoding by providing hardware acceleration for every stage of H.264 decode, and for all but the initial bitstream-processing/entropy-decoding stage of VC-1.

The GTS and GT models differ in a couple of areas. First, the GTS is, obviously, clocked higher on all three significant clock domains (core, shader and memory). Second, the GTS carries obligatory support for dual-link HDCP (via an external ASIC), whereas it's an AIB-partner option on the GT, and, unfortunately, we don't see many partners taking it up.

You lose a chunk of performance when moving on down from G80 to G84, naturally, but you gain some extra features that even the mighty GeForce 8800 Ultra is bereft of.

Standard GeForce 8600 GT cards are clocked at 540MHz core, 1190MHz shader, and 1400MHz (effective) GDDR3 memory. XFX lists such a model but also offers an XXX Edition, with clocks boosted to 620MHz, 1355MHz, and 1600MHz for core/shader/memory, respectively. That's an uplift of roughly 14-15 per cent across all three clocks, which is nice.
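The overclock figures can be checked with a few lines of arithmetic. This minimal sketch takes the stock and XXX Edition clocks quoted above and works out the percentage uplift for each domain; the variable and function names are illustrative only.

```python
# Stock GeForce 8600 GT clocks versus XFX's XXX Edition, in MHz, as quoted in the review.
# Memory figures are effective (double-data-rate) values.
STOCK = {"core": 540, "shader": 1190, "memory": 1400}
XXX = {"core": 620, "shader": 1355, "memory": 1600}


def percent_increase(base, boosted):
    """Percentage uplift of each clock domain going from base to boosted."""
    return {domain: (boosted[domain] / base[domain] - 1) * 100 for domain in base}


if __name__ == "__main__":
    for domain, pct in percent_increase(STOCK, XXX).items():
        print(f"{domain}: +{pct:.1f}%")
```

Run as a script, it reports about +14.8% for the core, +13.9% for the shaders and +14.3% for the memory, so "around 15 per cent" is a fair rounding.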

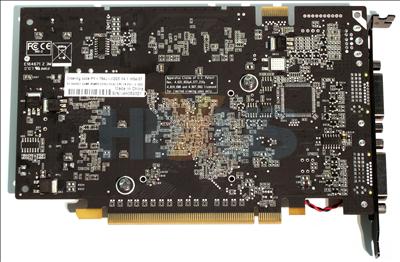

The card is equipped with 256MiB of Qimonda 1.4ns GDDR3 memory, which is left uncovered. It doesn't run particularly warm, so RAMsinks, if installed, would serve a largely aesthetic purpose.
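To put the 128-bit bus and the quoted memory clocks into a single figure, the sketch below computes peak theoretical memory bandwidth as bus width in bytes multiplied by the effective transfer rate. It assumes decimal units (1 GB = 10^9 bytes) and gives a theoretical ceiling, not a measured result; the function name is hypothetical.

```python
# Peak theoretical memory bandwidth = (bus width in bytes) x (effective transfers per second).
# Decimal units are assumed: 1 GB/s = 10**9 bytes per second.
BUS_WIDTH_BITS = 128  # GeForce 8600 GT memory interface, per the review


def peak_bandwidth_gb_s(bus_width_bits, effective_mhz):
    """Return peak theoretical bandwidth in GB/s for a given bus width and effective clock."""
    bytes_per_transfer = bus_width_bits // 8
    transfers_per_second = effective_mhz * 1_000_000
    return bytes_per_transfer * transfers_per_second / 1e9


if __name__ == "__main__":
    for label, mhz in (("stock", 1400), ("XFX XXX", 1600)):
        print(f"{label}: {peak_bandwidth_gb_s(BUS_WIDTH_BITS, mhz):.1f} GB/s")
```

That works out to 22.4GB/s for a stock card and 25.6GB/s for the XXX Edition.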

The XFX GeForce 8600 GT XXX packs in 289m transistors on an 80nm process. Power draw is reckoned to be around 41W for a default-clocked model, and we don't expect XFX's card to draw much more. That's why the GPU gets away with a small heatsink/fan combination. We found it to be quite noisy in both 2D and 3D use, suggesting that the fan isn't thermostatically controlled.

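Carrying on the power theme, the 6-pin PCIe power connector seen on beefier cards is conspicuous by its absence; at around 41W, the card can draw everything it needs from the PCI Express x16 slot, which supplies up to 75W.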

The rear houses a pair of dual-link DVI ports, but neither is HDCP-enabled. Adding the extra hardware required (an HDCP key-storage ASIC, transmitter, etc.) would have raised the cost. Our view, however, is that all modern GPUs should be HDCP-compliant, preferably with full integration into the core, à la AMD's Radeon HD 2000 series.

The DIN socket is for video-out only, by the way.

Summary

XFX has boosted standard GeForce 8600 GT frequencies by around 15 per cent on its XXX model. The lack of HDCP support may be problematic for HD DVD/Blu-ray playback, especially given the extra decode hardware present in this SKU. Current pricing is around the £110 mark, nearly £30 more than the cheapest stock-clocked models. That price also brings various excellent DX9 graphics cards into the equation, such as the Radeon X1950 Pro and GeForce 7900 GS.