Where's MIC?

Intel chief technology officer Justin Rattner's final-day IDF 2011 keynote address once again delved into the future of computing for the chip company. This time around, he spoke about the evolution of multi- and many-core computing.

Touching first on the 22nm-based Knights Corner, which uses a many-integrated-core (MIC) chip architecture, Rattner said that advances in software parallelism have encouraged Intel to continue developing many-core processors. He cited general throughput speed-ups of at least 30x on a 64-core chip, and mentioned that coding for these MICs is becoming more pervasive.

"Our MIC chips use the IA architecture to the fullest extent," Rattner said, meaning that a coder working on a multi-core Xeon can transition to many-core computing seamlessly.

Need for more cores

Evangelising MICs further, Rattner demonstrated practical applications of these many-core processors: increased performance for cloud computing; multi-core-driven web app development via parallelisable JavaScript; using multi-core chips' vector engines (AVX) as digital processors for an LTE base station; and using the computational power to increase security on the PC.

While there's clearly an eclectic range of applications and needs for multi- and many-core computing, Rattner seemed at pains to justify Intel's continuing investment in moving more cores - CPU and GPU - on to upcoming silicon.

Moving forward - energy efficiency is key

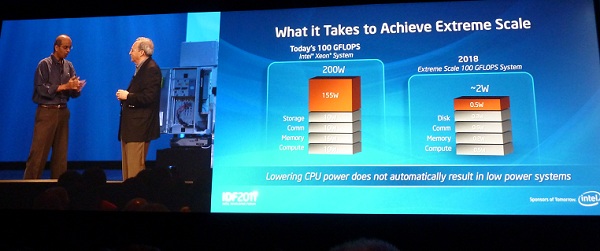

So what lies ahead for many-core computing? "We want to improve energy efficiency by 300x in 10 years," Rattner said. Putting some numbers on this extreme-scale project, Intel reckons that it currently takes a Xeon system consuming 200W to push out 100 GFLOPS of compute power. By 2018, Intel wants the same performance delivered by a system using just 2W.
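To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the two data points quoted above (100 GFLOPS at 200W today, the same throughput at 2W as the goal); the function names are ours, purely for illustration. Taken on their own, those two points work out to a 100x gain in GFLOPS per watt, so the 300x headline figure presumably rests on a baseline or scope that isn't captured by these two numbers.

```python
def gflops_per_watt(gflops: float, watts: float) -> float:
    """Throughput delivered per watt of system power."""
    return gflops / watts


def picojoules_per_flop(gflops: float, watts: float) -> float:
    """Energy spent on each floating-point operation, in picojoules."""
    flops_per_second = gflops * 1e9          # 1 GFLOPS = 1e9 FLOP/s
    joules_per_flop = watts / flops_per_second  # W = J/s
    return joules_per_flop * 1e12            # 1 J = 1e12 pJ


# Figures quoted in the keynote coverage: (GFLOPS, watts)
today = (100.0, 200.0)
target = (100.0, 2.0)

improvement = gflops_per_watt(*target) / gflops_per_watt(*today)

if __name__ == "__main__":
    print(f"Today:  {picojoules_per_flop(*today):.0f} pJ per FLOP")
    print(f"Target: {picojoules_per_flop(*target):.0f} pJ per FLOP")
    print(f"Implied efficiency gain: {improvement:.0f}x")
```

Expressing the goal as energy per operation rather than watts makes it easier to compare systems of different sizes.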

Making this bold claim possible is a new technology called near-threshold voltage. Today's transistors - the building blocks of chips - are driven by an input voltage that's significantly higher than what's needed. The reason comes down to how granular the incoming supply is, and right now it isn't granular enough.

New technologies needed

Near-threshold voltage (NTV) operation uses just enough power to switch the transistor. Running close to this on/off threshold increases peak efficiency, according to Rattner, by enough to provide a 5x energy-efficiency improvement over the status quo. Of course, Intel needs to work on providing such a fine-grained input voltage.

Illustrating the pragmatic advantages of NTV is the solar-powered experiment shown earlier this week. Explaining it further, Rattner said that, thanks to some NTV trickery, the Pentium-class 'Claremont' chip uses less power when active than an Atom CPU in standby mode.

But reducing system power-draw will require a holistic approach. Intel also demoed a mega-bandwidth Hybrid Memory Cube - RAM that's stacked in layers on top of one another - reckoned to be good for 1TB/s transfers while consuming just eight watts. Scale it down and the sub-1W realm becomes possible.
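As a rough illustration of what "scale it down" could mean, the Python sketch below converts the quoted 1TB/s at eight watts into energy per bit, then estimates the bandwidth available under a smaller power budget. Two assumptions here are ours rather than Intel's: that 1TB means 10^12 bytes, and that power scales linearly with bandwidth, which real memory is unlikely to follow exactly. The function names are hypothetical.

```python
def picojoules_per_bit(bytes_per_second: float, watts: float) -> float:
    """Energy spent moving each bit, in picojoules."""
    bits_per_second = bytes_per_second * 8
    return watts / bits_per_second * 1e12   # W = J/s, 1 J = 1e12 pJ


def bandwidth_at_power(bytes_per_second: float, watts: float,
                       budget_watts: float) -> float:
    """Bandwidth (bytes/s) at a given power budget.

    Assumes power scales linearly with bandwidth, i.e. the energy
    per bit stays constant. This is a simplification.
    """
    return bytes_per_second * (budget_watts / watts)


# Figures quoted for the Hybrid Memory Cube demo
HMC_BANDWIDTH = 1e12   # 1TB/s, taking 1TB as 10**12 bytes (assumption)
HMC_POWER = 8.0        # watts

if __name__ == "__main__":
    energy = picojoules_per_bit(HMC_BANDWIDTH, HMC_POWER)
    one_watt = bandwidth_at_power(HMC_BANDWIDTH, HMC_POWER, 1.0)
    print(f"Energy per bit: {energy:.2f} pJ")
    print(f"Bandwidth at 1W: {one_watt / 1e9:.0f} GB/s")
```

Under those assumptions, a one-watt budget would still leave bandwidth in the region of a hundred-odd gigabytes per second, which is why the sub-1W realm looks plausible on paper.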

Summary

As a company that's defined by silicon prowess, Intel's banking on scaling IA down in terms of manufacturing process and, through a range of new technologies, power. It's well reported that the company has had a difficult time coaxing low operational and standby voltages from IA, so we're intrigued to see just how well it can marry the competing interests of multi-core computing and devilishly low power-draw.