It's all about running it on those cores, baby

Software-driven power

Let's think about this for a second. Intel is using a complete x86 CPU architecture, albeit with necessary bolt-ons such as a 16-wide vector ALU, to run graphics. What that really means is that rendering takes place in software. Yup, you've read that right: Larrabee uses massively parallel hardware to run rendering as software code.
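To make the "16-wide" idea a little more concrete, here's a minimal Python sketch of how a wide vector unit works through pixels. One operation covers 16 lanes at once, and a mask switches off the unused lanes in a final, partial batch. The names (`vector_madd`, `shade_pixels`) and the multiply-add "shader" are purely illustrative assumptions, not Intel's instruction set.

```python
# Toy model of a 16-lane vector unit. LANES = 16 mirrors the "16-wide" figure;
# the functions and the multiply-add "shader" are hypothetical illustrations.
LANES = 16


def vector_madd(a, b, c, mask):
    """One 'vector instruction': per-lane a*b + c, applied only where mask is True.

    Masked-off lanes simply pass c through unchanged.
    """
    return [a[i] * b[i] + c[i] if mask[i] else c[i] for i in range(LANES)]


def shade_pixels(intensities, gain, bias):
    """Scale and offset pixel intensities, 16 pixels per vector operation."""
    out = []
    for start in range(0, len(intensities), LANES):
        chunk = intensities[start:start + LANES]
        active = len(chunk)
        # Pad the last partial batch to a full vector and mask off unused lanes.
        a = chunk + [0.0] * (LANES - active)
        mask = [i < active for i in range(LANES)]
        result = vector_madd(a, [gain] * LANES, [bias] * LANES, mask)
        out.extend(result[:active])  # keep only the lanes that held real pixels
    return out
```

Shading 20 pixels this way takes two vector operations rather than 20 scalar ones, with four of the 16 lanes idle in the second.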

So, once the input data has been provided, vertex shading, geometry shading, primitive setup, rasterisation, pixel shading and blending (the step-by-step building blocks of a modern GPU pipeline) are all done in software on Larrabee. On current NVIDIA and ATI cards, by contrast, it is mainly the shading stages that are programmable, with most of the rest handled by fixed-function hardware.
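For a flavour of what "rasterisation in software" means, here's a minimal sketch of the classic edge-function (half-space) approach: a pixel is covered if its centre lies on the inside of all three triangle edges. This is a textbook technique swapped in for illustration. The article doesn't describe Intel's actual rasteriser, and the function names are hypothetical.

```python
def edge(ax, ay, bx, by, px, py):
    """Edge function: positive if P lies to the left of the edge A->B."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterise(v0, v1, v2, width, height):
    """Return the (x, y) pixels whose centres fall inside a counter-clockwise triangle."""
    xs = [v0[0], v1[0], v2[0]]
    ys = [v0[1], v1[1], v2[1]]
    # Only test pixels inside the triangle's bounding box, clipped to the screen.
    x_min = max(int(min(xs)), 0)
    x_max = min(int(max(xs)) + 1, width)
    y_min = max(int(min(ys)), 0)
    y_max = min(int(max(ys)) + 1, height)
    covered = []
    for y in range(y_min, y_max):
        for x in range(x_min, x_max):
            px, py = x + 0.5, y + 0.5  # sample at the pixel centre
            w0 = edge(v1[0], v1[1], v2[0], v2[1], px, py)
            w1 = edge(v2[0], v2[1], v0[0], v0[1], px, py)
            w2 = edge(v0[0], v0[1], v1[0], v1[1], px, py)
            # Inside (or exactly on) all three edges means the pixel is covered.
            if w0 >= 0 and w1 >= 0 and w2 >= 0:
                covered.append((x, y))
    return covered
```

Because each pixel's test is independent, this kind of loop maps neatly onto wide vector hardware: 16 pixel centres can be tested at once.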

Intel reckons that this makes Larrabee fully programmable and far better suited to future workloads, but the approach comes at an inevitable cost: to get the most out of Larrabee, developers will need to code for it natively using a C/C++ API. The 'problem' is somewhat mitigated by the fact that this shouldn't be too different from writing for x86, which, after all, is what Larrabee is based upon.

Being software-based has other intrinsic advantages too: support for newer APIs can be added through driver updates as those APIs become available. It's rather like adding microcode to your CPU.

DirectX and OpenGL will be supported, of course, and the traditional rendering pipeline can be run through software, but it's not how Larrabee talks best.

Bin it

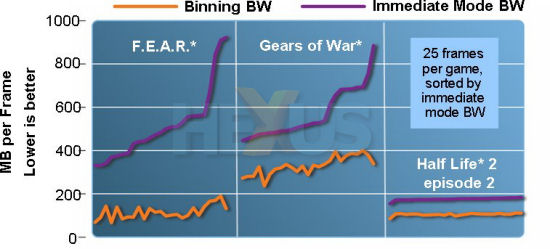

No matter how good a VPU-orientated architecture is internally, having lots and lots of usable memory bandwidth is, and always will be, important. We don't know the memory speed or interface width of real-world Larrabee, but Sieler did divulge that the architecture uses what Intel terms a binning approach, which saves bandwidth compared with the immediate-mode rendering currently employed by GPUs.

Binning is analogous to the tile-based rendering approach used by older GPUs and popularised by PowerVR. Sieler explained that a scene is broken down into tiles, say 128x128 pixels each when using 32-bit depth and colour. Each primitive is then assigned to the tiles it covers, and these per-tile lists are stored in 'bins' in external memory. When a tile is rendered, its bin is emptied and the tile drawn, saving on unnecessary rendering by simply not drawing what you won't see.
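The binning step can be sketched in a few lines of Python, assuming a simple scheme in which each triangle is dropped into the bin of every tile its bounding box touches. The 128-pixel tile and the 32-bit depth-plus-colour figures come from Sieler's example. The function names and the bounding-box test are our own illustrative assumptions. The arithmetic also hints at why a tile of that size makes sense: 128 x 128 pixels at 8 bytes each is 128 KB, presumably small enough to keep on-chip while the tile is drawn.

```python
TILE = 128               # tile edge in pixels (the example size quoted above)
BYTES_PER_PIXEL = 4 + 4  # 32-bit colour + 32-bit depth


def tile_footprint_bytes(tile=TILE, bpp=BYTES_PER_PIXEL):
    """Colour + depth storage needed for one tile."""
    return tile * tile * bpp


def bin_triangles(triangles, width, height, tile=TILE):
    """Map each tile (tx, ty) to the indices of triangles whose bounding box touches it.

    The bounding-box test is conservative: a triangle may land in a bin for a
    tile it doesn't actually cover, but it is never left out of one it does.
    """
    tiles_x = (width + tile - 1) // tile
    tiles_y = (height + tile - 1) // tile
    bins = {(tx, ty): [] for ty in range(tiles_y) for tx in range(tiles_x)}
    for idx, tri in enumerate(triangles):
        xs = [v[0] for v in tri]
        ys = [v[1] for v in tri]
        # Range of tiles spanned by the bounding box, clamped to the screen.
        tx0 = max(int(min(xs)) // tile, 0)
        tx1 = min(int(max(xs)) // tile, tiles_x - 1)
        ty0 = max(int(min(ys)) // tile, 0)
        ty1 = min(int(max(ys)) // tile, tiles_y - 1)
        for ty in range(ty0, ty1 + 1):
            for tx in range(tx0, tx1 + 1):
                bins[(tx, ty)].append(idx)
    return bins
```

Drawing would then proceed bin by bin: each tile's triangle list is rasterised while that tile's colour and depth data stay local, rather than every triangle reading and writing a full-screen framebuffer in external memory.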

Scaling

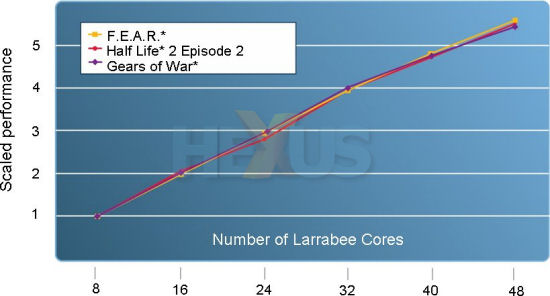

It's absolutely imperative that Larrabee's performance scales as core counts increase, and Intel is adamant that it does, trotting out the graphic shown here.

Through several optimisations, including breaking the large vertex buffers used by certain game engines (FEAR's, for example) into manageable chunks, Intel reckons that performance increases near-linearly as more and more cores are added to the mix.
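The vertex-buffer trick can be sketched as plain data parallelism: split one big buffer into fixed-size chunks and hand each chunk to a worker, one per core. The "vertex shader" here is a stand-in scale-and-offset, and the chunk size of 1,024 vertices is an arbitrary assumption rather than anything Intel has quoted. Bear in mind that CPython threads won't genuinely run this in parallel because of the global interpreter lock; the point is the decomposition, not the speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(vertices, chunk_size):
    """Break one large vertex buffer into independent, fixed-size chunks."""
    return [vertices[i:i + chunk_size] for i in range(0, len(vertices), chunk_size)]


def transform_chunk(chunk, scale, offset):
    """Hypothetical stand-in for a vertex shader: scale and offset each coordinate."""
    return [(x * scale + offset, y * scale + offset, z * scale + offset)
            for (x, y, z) in chunk]


def process_vertex_buffer(vertices, cores, chunk_size=1024, scale=2.0, offset=1.0):
    """Transform a vertex buffer chunk by chunk across a pool of `cores` workers."""
    chunks = split_into_chunks(vertices, chunk_size)
    with ThreadPoolExecutor(max_workers=cores) as pool:
        results = pool.map(lambda c: transform_chunk(c, scale, offset), chunks)
        # map() yields results in input order, so the buffer is reassembled in sequence.
        return [v for chunk in results for v in chunk]
```

With one monolithic buffer, only one core could work on it; with, say, three chunks, up to three cores can, which is the kind of restructuring that lets extra cores actually pay off.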

How is this possible, you might ask? The answer, we suppose, lies in the software-driven approach to rendering. You don't need to add a commensurate number of ROPs and texture units, because the cores (and dedicated logic) do it all.

What we don't know

Intel remains tight-lipped on just how many cores various iterations of Larrabee will ship with. We don't know exactly when it will come to market. How will software-based rendering actually pan out from an efficiency point of view? What about speeds, memory sizes, caches, power requirements?

What will NVIDIA and ATI have in response? What we do know is that Larrabee is as real as a hangover, but who will be suffering it next year: NVIDIA, ATI, or Intel?