Performance - Main test

All the boards stacked up

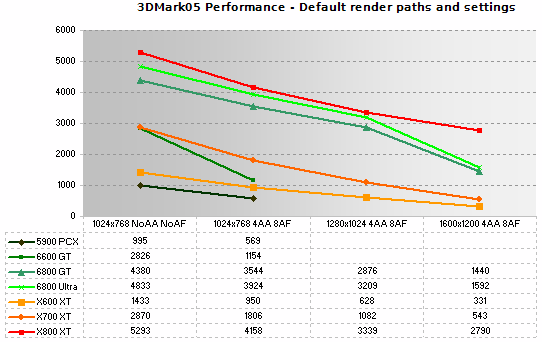

I tested at 1024x768 with no IQ (image quality) settings enabled, which gives the same base score you'd get from the free download version. Then I tested at 1024x768, 1280x1024 and 1600x1200, all with 4x multisample anti-aliasing and 8x anisotropic filtering. The idea was to see just how far from playable each resolution is on current hardware, using the benchmark as Futuremark intended: as a predictor of performance in future game titles. If we take 5000 marks as the 'playable' figure, we can work out which resolution suits which hardware, and whether you can get away with enabling IQ options too.

After all, in previous versions of 3DMark, 5000 marks has been a rough indicator of decent performance in titles that use the DirectX version and rendering features that 3DMark implemented at the time. So we'll use that yardstick here, just for giggles.
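The 5000-mark rule of thumb boils down to a simple filter over each card's scores. Here's a minimal sketch, assuming scores are keyed by a setting label of your choosing; `playable_settings` and `PLAYABLE_MARKS` are hypothetical names, and the threshold is this article's yardstick, not an official Futuremark figure.

```python
from __future__ import annotations

# Hypothetical constant: the article's 'playable' yardstick, not a Futuremark spec.
PLAYABLE_MARKS = 5000


def playable_settings(scores: dict[str, int], threshold: int = PLAYABLE_MARKS) -> list[str]:
    """Return the setting labels whose 3DMark05 score meets or beats the threshold."""
    return [setting for setting, marks in scores.items() if marks >= threshold]
```

Feed it one card's results, keyed by labels such as "1024x768" or "1280x1024 4xAA/8xAF", and anything it returns counts as playable by this yardstick.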

3DMark05 Performance

Using ForceWare 66.29, the two NVIDIA boards with 128MB of memory couldn't complete the tests beyond the first two settings, failing with an out-of-memory error (D3DERR_OUTOFVIDEOMEMORY, for the curious). Apart from that, every card ran the test with nary a problem or major rendering issue.
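For a feel of how quickly card memory disappears once anti-aliasing is on, here's a back-of-envelope sketch of the size of a 4x multisampled render target. It assumes 32-bit colour and 32-bit depth/stencil per sample with no compression, and it ignores resolve buffers, textures, shadow maps and vertex buffers. It's one plausible contributor to the out-of-memory failures, not a diagnosis; `msaa_buffer_bytes` is a hypothetical helper.

```python
def msaa_buffer_bytes(width: int, height: int, samples: int = 4,
                      color_bytes: int = 4, depth_bytes: int = 4) -> int:
    """Rough, uncompressed size of a multisampled colour + depth/stencil target."""
    return width * height * samples * (color_bytes + depth_bytes)


# Print the estimate for each anti-aliased resolution tested in this article.
for w, h in [(1024, 768), (1280, 1024), (1600, 1200)]:
    mib = msaa_buffer_bytes(w, h) / 2**20
    print(f"{w}x{h} 4xAA: ~{mib:.1f} MiB")
```

Under those assumptions the loop prints roughly 24.0, 40.0 and 58.6 MiB. On a 128MB board, that leaves shrinking room for 3DMark05's large textures and geometry as resolution climbs.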

In terms of our forward-looking prediction and the 5000-mark line, only the Radeon X800 XT managed to break that barrier, and only at the most basic default settings. Interestingly, dropping to 800x600 for a quick test (which doesn't look too bad on my 1600x1200 LCD thanks to pixel scaling) showed the 6800 GT and Ultra breaking 5000 marks too. But even in next-generation game titles, are you going to be willing to drop to 800x600 with your £300 graphics card, on what is most likely a 1280x1024 LCD if current buying trends are anything to go by?

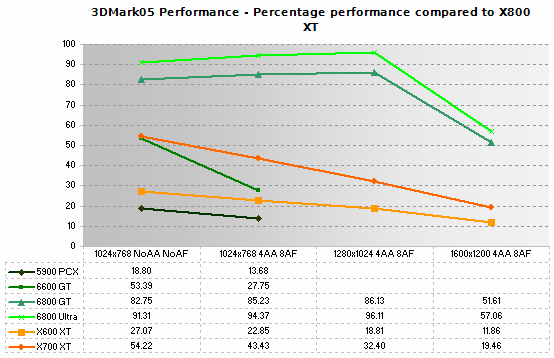

Let's plot the same numbers with the X800 XT as our 100% baseline, to see how close the other cards get.
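Scaling to a baseline card is just a division at each setting. A minimal sketch, assuming a dictionary of card name to score for a single setting; `scale_to_baseline` is a hypothetical helper, not part of 3DMark05.

```python
from __future__ import annotations


def scale_to_baseline(scores: dict[str, float], baseline: str) -> dict[str, float]:
    """Express every card's score as a percentage of the baseline card's score."""
    base = scores[baseline]
    if base <= 0:
        raise ValueError("baseline score must be positive")
    return {card: 100.0 * marks / base for card, marks in scores.items()}
```

Run it once per setting with `baseline="X800 XT"`. The baseline always comes out at 100%, and every other card reads as a fraction of it.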

3DMark05 - Performance Scaled to 100% of X800 XT

The 6800 Ultra stays within 10% of the X800 XT until 1600x1200, where performance falls off sharply to around 50% of the X800 XT. The 6800 GT mirrors that trend, its lower clocks leaving it just a touch behind the Ultra.

The 6600 GT and X700 XT start at around 50% of the performance of the 500MHz X800 XT, which is nice to see given that they have roughly 50% of its raw pixel-pushing power and 50% of its memory bandwidth. However, the 6600 GT's performance drops off much faster at 1024x768 with IQ enabled, while the X700 XT's fall-off is less severe.
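The "roughly 50%" comparison is theoretical peak arithmetic. Here's a sketch, assuming one pixel per pipeline per clock and bandwidth computed as bus width times effective memory clock. The helper names are hypothetical, and you should plug in vendor spec-sheet figures rather than trust any numbers here.

```python
def fill_rate_mpix(pipelines: int, core_mhz: float) -> float:
    """Theoretical pixel fill rate in Mpixels/s: one pixel per pipeline per clock."""
    return pipelines * core_mhz


def bandwidth_gbs(bus_bits: int, effective_mem_mhz: float) -> float:
    """Theoretical memory bandwidth in GB/s: bytes per transfer times MHz, /1000."""
    return bus_bits / 8 * effective_mem_mhz / 1000


# Illustrative only: a 16-pipe, 256-bit part against an 8-pipe, 128-bit part
# at the same clocks gives 0.5 on both measures.
fill_ratio = fill_rate_mpix(8, 500) / fill_rate_mpix(16, 500)
bw_ratio = bandwidth_gbs(128, 1000) / bandwidth_gbs(256, 1000)
```

Halving both pipelines and bus width at equal clocks halves both peaks, which is why landing near 50% of the flagship's score looks like a fair return.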

The 5900 PCX is dismal throughout.

The X600 XT doesn't fare much better. It's a card for the here and now, but at 10% of the X800 XT's performance at 1600x1200 with IQ enabled, and never more than ~30% at any setting, it's not really a forward-looking card, at least as far as 3DMark05 predicts.

Is that prediction a good one?

If there's one thing that defines 3DMark05's performance, it's that it's heavily geometry limited, in a couple of ways. First, with over 1M vertices per scene most of the time, that's a whole lot of big vertex buffers (size seems to be the key) to be locked in memory and pushed over the graphics bus to the card as geometry changes during the game tests.

Second, once those buffers reach the card, the IHVs are seeing usage patterns that scatter them all over card memory rather than keeping them in one place. The driver points the vertex engines at those scattered locations, so the cards become vertex fetch limited: the vertex engines sit waiting for vertices to be streamed out of card memory and into their input buffers.
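To put the bus side of that in perspective, here's a worst-case estimate of vertex traffic if every vertex were re-sent each frame. The 32-byte vertex size and the frame rate are assumptions, not measurements. 3DMark05 only uploads geometry that changes, so real traffic should be lower. `vertex_traffic_mb_per_s` is a hypothetical helper.

```python
def vertex_traffic_mb_per_s(vertices_per_frame: int, bytes_per_vertex: int,
                            fps: float) -> float:
    """Worst-case vertex upload rate in MB/s (10^6 bytes), re-sending every vertex."""
    return vertices_per_frame * bytes_per_vertex * fps / 1e6


# Assumed: ~1M vertices per scene, 32-byte vertices, 30 frames per second.
traffic = vertex_traffic_mb_per_s(1_000_000, 32, 30)
```

That works out to 960 MB/s. For comparison, AGP 8x peaks at about 2.1 GB/s and first-generation PCI Express x16 at 4 GB/s per direction, so even a fraction of that traffic takes a noticeable slice of the bus before textures and commands are counted.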

So you're not terribly limited by the power of your vertex units, or how many of them you have, as you might expect. Rather, in 3DMark05 you seem to be limited by the card's performance on the graphics bus (I hinted at that in the first article, but was unsure how to explain it) and by how fast your hardware can fetch vertices from card memory.

Does that make it a less useful forward-looking benchmark? Unless future games are going to be massively geometry heavy, and from what I can gather they won't be, 3DMark05 is less of a predictor of actual framerates in games this time around, and more a general means of ranking hardware from top to bottom at any given point in time, disregarding the actual score. If that's all you've ever used 3DMark releases for anyway, then great!
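If ranking is the benchmark's real job, one quick sanity check is whether two runs (two settings, or two driver releases) order the cards the same way. Here's a sketch using a discordant-pair count, the quantity behind Kendall's tau. The helper names are hypothetical, and ties are simply not counted as disagreements.

```python
from __future__ import annotations

from itertools import combinations


def rank(scores: dict[str, float]) -> list[str]:
    """Card names ordered from highest to lowest score."""
    return sorted(scores, key=scores.get, reverse=True)


def discordant_pairs(a: dict[str, float], b: dict[str, float]) -> int:
    """Count card pairs that two runs order differently (0 means identical ranking)."""
    cards = sorted(set(a) & set(b))
    return sum(1 for x, y in combinations(cards, 2)
               if (a[x] - a[y]) * (b[x] - b[y]) < 0)
```

A count of zero means the absolute scores may shift between runs, but the top-to-bottom order, which is what matters for this use, holds.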

For my purposes at HEXUS, where I get to decide whether we use it or not: in its present state, with current drivers, it's a decent way to rank hardware in a review, and it's going to be interesting to see how, or whether, driver updates can alleviate the bottlenecks in 05's geometry usage. We'll be using it. It's popular, everyone wants us to run it, and it ranks hardware pretty well using the basic test.

And then there are the feature tests.