How we test

| GPGPU testing setup | |
|---|---|
| Motherboard | Foxconn Bloodrage X58 |
| Processor | Intel Core i7 965 Extreme Edition, 3.2GHz |
| Memory | 6GB (3 x 2GB) Corsair DOMINATOR PC-12800 |
| Memory timings and speed | 9-9-9-24-1T @ 1,333MHz |
| Graphics cards | HIS Radeon HD 5870 1,024MB and BFG GeForce GTX 295 1,792MB |
| Power supply | Corsair HX1000W |
| Hard drive | Seagate Barracuda 7200.12, 1TB |
| Optical drive | Sony SATA DVD-RW |

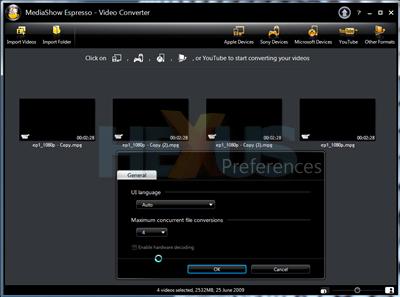

The application can’t use more than four threads per video encode, so we queued up four copies of the same input file to keep the Intel Core i7 965's cores fully utilised.

To ensure the encodes ran simultaneously, we set the maximum number of concurrent file conversions to four.
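The queueing arrangement described above can be sketched in Python. This only illustrates the idea of capping a queue at four concurrent jobs; the `encode` function and the file names are hypothetical stand-ins and say nothing about how the application works internally.

```python
from concurrent.futures import ThreadPoolExecutor

MAX_CONCURRENT = 4  # mirrors the "maximum concurrent file conversions" setting


def encode(job_name):
    # Hypothetical placeholder for a single video encode. In the real
    # application each encode would use up to four threads of its own.
    return f"{job_name}: done"


def run_queue(jobs, max_concurrent=MAX_CONCURRENT):
    # Run at most `max_concurrent` jobs at once and return the
    # results in the order the jobs were queued.
    with ThreadPoolExecutor(max_workers=max_concurrent) as pool:
        return list(pool.map(encode, jobs))


# Four copies of the same input file, as in the test (names are made up).
queue = [f"input_copy_{i}.mpg" for i in range(1, 5)]
results = run_queue(queue)
```

With the cap set to four and four jobs queued, every job starts immediately rather than waiting for an earlier one to finish.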

On the ATI testbed, hardware decoding can also be enabled. However, this seemed to cap performance, making it slower than CPU-only encoding, so we left it disabled.

The input file had the following specification: Video – 2 minutes 28 seconds, 1,920x1,080 MPEG-2 HD, 35Mbps bit-rate, 25fps frame-rate, progressive-scan. Audio – stereo, MPEG-1 Layer II, 48kHz, 384kbps.

The output file used the default parameters for the YouTube profile, as follows: Video – 2 minutes 28 seconds, 1,280x720, H.264, 1.93Mbps bit-rate, 29fps frame-rate, progressive-scan. Audio – stereo, AAC-LC, 44kHz, 220kbps.
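To put those bit-rates in context, the approximate size of each file can be worked out from the figures above. This is a rough estimate only: it assumes constant bit-rates, ignores container overhead, and uses decimal megabytes (1MB = 1,000,000 bytes). The function name is ours, for illustration.

```python
DURATION_S = 2 * 60 + 28  # 2 minutes 28 seconds


def stream_size_mb(bitrate_kbps, duration_s=DURATION_S):
    # kilobits per second * seconds = kilobits
    # kilobits / 8 = kilobytes, kilobytes / 1000 = megabytes
    return bitrate_kbps * duration_s / 8 / 1000


# Input: 35Mbps MPEG-2 video plus 384kbps audio
input_mb = stream_size_mb(35_000) + stream_size_mb(384)

# Output: 1.93Mbps H.264 video plus 220kbps audio
output_mb = stream_size_mb(1_930) + stream_size_mb(220)

print(f"input  ~{input_mb:.1f}MB")
print(f"output ~{output_mb:.1f}MB")
```

Under these assumptions the input comes to roughly 655MB and the output to roughly 40MB per copy.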

The screenshot highlights the settings that were selected in the application.

Despite what CyberLink claims on its website, the level of customisation on offer is extremely limited. For example, the audio output parameters can't be adjusted beyond the choice of format. In addition, only a very limited selection of output resolutions is available, and there is no bit-rate or frame-rate adjustment. As a result, the output frame-rate on both the NVIDIA and AMD systems is incorrect: the application outputs 29fps when it should have matched the 25fps source.
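The scale of the frame-rate mismatch can be illustrated with some simple arithmetic. The sketch below assumes the output really is exactly 29fps, as reported, and that the clip's duration is preserved. We don't know how the application generates the additional frames.

```python
from fractions import Fraction

DURATION_S = 2 * 60 + 28  # 2 minutes 28 seconds
SOURCE_FPS = 25           # frame-rate of the input file
OUTPUT_FPS = 29           # frame-rate reported for the output file

source_frames = DURATION_S * SOURCE_FPS
output_frames = DURATION_S * OUTPUT_FPS

# Frames in the output that have no one-to-one counterpart in the source
extra_frames = output_frames - source_frames

# Output frames produced per source frame, as an exact ratio
ratio = Fraction(OUTPUT_FPS, SOURCE_FPS)

print(source_frames, output_frames, extra_frames, ratio)
```

On those assumptions the encoder has to produce 4,292 frames from 3,700 source frames, so 592 of the output frames can't correspond one-to-one with a source frame.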