SLI disadvantages

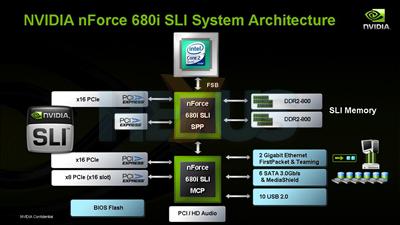

Since our last test, we've discovered a few more things about the Nvidia nForce 680a SLI chipset and how it differs from the nForce 680i SLI, its equivalent for Intel processors. Our discoveries have little bearing on the megatasking benchmarks later in this article, but they are important enough that we're including them here for your general edification. In a nutshell, SLI does not work in quite the same way on Quad FX as it does on the equivalent Intel platform, which means that configuring SLI incorrectly can seriously hurt performance.

This is Nvidia's schematic for its nForce 680i SLI chipset. Logically enough, you put your two graphics cards in the two 16x PCI Express slots, and Nvidia makes this very clear by overlapping those two slots with its SLI logo. Although the slots run off different physical chips (one from the SPP, one from the MCP), those chips are directly connected to each other via a HyperTransport link. The SPP takes the primary role, as it is the part of the chipset directly connected to the processor. Previous nForce chipsets offering a pair of 16x slots with full 16x performance use the same configuration, as do their AMD-oriented equivalents. With the latter there is no FSB, since the CPU is connected to the first chip via HyperTransport, but the second chip is still daisy-chained off the first by another HyperTransport connection.

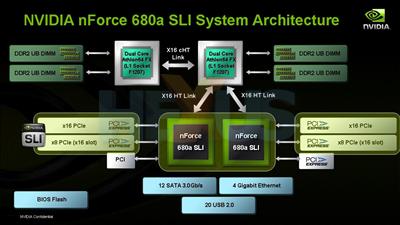

With nForce 680a SLI, the diagram may be arranged differently, but the SLI setup looks basically the same. There are two MCPs, each with two physical 16x slots: one wired for 16x and the other for 8x. It would seem obvious to proceed as before and put a graphics card in each full-speed 16x slot, one per MCP. This is indeed how AMD configured the system it sent us for review.

But there's a catch. Look closely at Nvidia's schematic and note where the SLI logo is placed: it overlaps a 16x slot and an 8x (electrical) slot coming off the same nForce 680a MCP. This is not for artistic reasons. Take another look at the diagram and you will see that there is no direct HyperTransport link between the two MCPs. If they want to communicate with each other, they must go via their HyperTransport links to the primary processor, which has to act as middleman. So it's not hard to see why running SLI across cards connected to different MCPs will not give you the best performance: routing traffic through the primary CPU adds too much latency.
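To make the routing argument concrete, here is a minimal Python sketch that treats each chipset as a graph of chip-to-chip links and counts the links between the chips hosting the two SLI cards. The chip labels (`CPU0`, `MCP0`, `MCP1` and so on) and the helper functions are hypothetical, and the link lists are simplified from the article's reading of Nvidia's schematics, with both 680a MCPs hanging off the primary processor. Link count is only a rough proxy for latency; it says nothing about the speed of each individual link.

```python
from collections import deque


def build_graph(links):
    """Turn a list of (chip, chip) links into an undirected adjacency map."""
    graph = {}
    for a, b in links:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def shortest_path(graph, start, goal):
    """Breadth-first search; returns the list of chips from start to goal."""
    if start == goal:
        return [start]
    previous = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in sorted(graph.get(node, ())):
            if neighbour in previous:
                continue
            previous[neighbour] = node
            if neighbour == goal:
                # Walk back through the predecessors to rebuild the route.
                path = [goal]
                while previous[path[-1]] is not None:
                    path.append(previous[path[-1]])
                return path[::-1]
            queue.append(neighbour)
    return None  # no route between the two chips


# Hypothetical, simplified chip-level links based on the article's description.
NFORCE_680I = build_graph([
    ("CPU", "SPP"),    # front-side bus to the SPP
    ("SPP", "MCP"),    # HyperTransport daisy-chain from SPP to MCP
])
NFORCE_680A = build_graph([
    ("CPU0", "MCP0"),  # HyperTransport link to the primary processor
    ("CPU0", "MCP1"),  # second MCP also reaches the primary processor only
])


def report(name, graph, chip_a, chip_b):
    path = shortest_path(graph, chip_a, chip_b)
    print(f"{name}: {' -> '.join(path)} ({len(path) - 1} link(s))")


if __name__ == "__main__":
    report("680i, SPP + MCP slots", NFORCE_680I, "SPP", "MCP")
    report("680a, one card per MCP", NFORCE_680A, "MCP0", "MCP1")
    report("680a, both cards on MCP0", NFORCE_680A, "MCP0", "MCP0")
```

Running it prints one line per configuration: a single link for the 680i, two links via `CPU0` for cards on separate 680a MCPs, and no inter-chip link at all when both cards sit on `MCP0`.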

To cut a long story short(er), running SLI on Quad FX means having one card at 16x and one at 8x off the primary MCP, whereas the equivalent Intel platform gives you SLI on two full-speed 16x connections. In practice, we haven't seen enormous differences with single-GPU cards, but things could be rather different with dual-GPU cards. It really is no wonder that Nvidia talks up the ability to run multiple graphics cards to drive lots of monitors, rather than quad-card SLI.
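The cost of the 16x + 8x arrangement can be put in rough numbers. The sketch below assumes first-generation PCI Express signalling (2.5 GT/s per lane with 8b/10b encoding, or 250 MB/s per lane in each direction); that per-lane figure and the helper names are our assumptions rather than figures taken from Nvidia's schematics, and the results are theoretical peaks, not measurements.

```python
# Assumption: first-generation PCI Express, 2.5 GT/s per lane with 8b/10b
# encoding, which works out to 250 MB/s per lane in each direction.
MB_PER_LANE_PER_DIRECTION = 250


def slot_bandwidth_mb(lanes):
    """Peak one-way bandwidth, in MB/s, of a slot wired for `lanes` lanes."""
    return lanes * MB_PER_LANE_PER_DIRECTION


# Electrical lane widths of the two SLI slots in each configuration.
CONFIGS = {
    "nForce 680i SLI (16x + 16x)": (16, 16),
    "nForce 680a SLI, same MCP (16x + 8x)": (16, 8),
}

if __name__ == "__main__":
    for name, lanes in CONFIGS.items():
        per_card = [slot_bandwidth_mb(n) for n in lanes]
        print(f"{name}: per card {per_card} MB/s, total {sum(per_card)} MB/s")
```

On these assumptions, the card in the 8x slot gets 2,000 MB/s each way against 4,000 MB/s for the 16x card, so the pair shares 6,000 MB/s rather than 8,000 MB/s. That gap has not translated into large differences with single-GPU cards in our testing, but it is the kind of headroom a dual-GPU card could start to miss.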