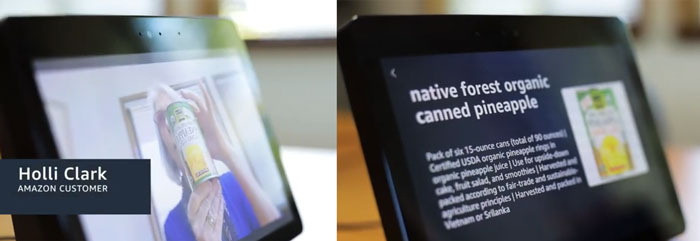

Amazon has introduced a new Alexa Skill for users of its Echo Show devices. The new 'Show and Tell' feature will be of greatest utility to those with visual impairment. It works just like it sounds: simply hold a bottle or can from your pantry in front of the Echo Show and ask "Alexa, what am I holding?" or "Alexa, what's in my hand?" and you should get an accurate result thanks to Amazon's advanced computer vision and machine learning tech.

Show and Tell was developed with input from engineer Stacie Grijalva, who suddenly lost her sight aged 41. She became committed to exploring how assistive technology could make life a little easier for the blind and visually impaired, and to sharing that information with others, says the Amazon blog. Grijalva was already a big user of Amazon home tech such as the Fire TV and smart home gadgets, so it was natural for her to work within this ecosystem.

Recognising items by feel only goes so far. For example, a humble bottle of soy sauce might be the same shape and size as a weapons-grade ghost chilli concoction, and canned foods are even more uniform in size and shape. "It's a tremendous help and a huge time saver because the Echo Show just sits on my counter, and I don’t have to go and find another tool or person to help me identify something. I can do it on my own by just asking Alexa," Grijalva said. Other testers and users of Show and Tell describe it as a game changer and a life improver.

Show and Tell is available right away in the US for users of first- and second-generation Echo Show devices.