The magic inside

The parallel processing power of G80 opened up avenues beyond gaming under the DirectX and OpenGL APIs. In February 2007, NVIDIA introduced its CUDA (Compute Unified Device Architecture) programming language. CUDA is an extension of the widely used C language, and it gave developers the ability to write applications that take advantage of the stream-processing capability of G80-based GPUs. That capability was, potentially, significantly greater than that of any quad-core CPU.
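To give a flavour of the programming model: a CUDA kernel is written as a single function that every thread runs. Each thread works out which data element it owns from its block and thread indices, which is `blockIdx.x * blockDim.x + threadIdx.x` in CUDA C. The sketch below emulates that scheme serially in plain Python, for illustration only. `saxpy_kernel` and `launch` are hypothetical names. On a GPU, the two nested loops would instead be thousands of threads running in parallel.

```python
def saxpy_kernel(block_idx, block_dim, thread_idx, a, x, y, out):
    """Work done by one emulated thread: find its global index, then compute one element."""
    i = block_idx * block_dim + thread_idx  # mirrors blockIdx.x * blockDim.x + threadIdx.x
    if i < len(x):  # guard: the last block may contain spare threads
        out[i] = a * x[i] + y[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Serially emulate a 1D grid launch: each (block, thread) pair runs the kernel once."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


n = 1000
x = [float(i) for i in range(n)]
y = [1.0] * n
out = [0.0] * n
block_dim = 256
grid_dim = (n + block_dim - 1) // block_dim  # ceil(n / block_dim) blocks
launch(saxpy_kernel, grid_dim, block_dim, 2.0, x, y, out)
print(out[:3], out[-1])
```

Because the thread count is rounded up to whole blocks, the bounds check inside the kernel is what stops the spare threads in the final block from writing past the end of the array.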

Intel has snapped up Havok, and ATI announced support for it just last week. Meanwhile, NVIDIA's purchase of AGEIA has borne fruit. AGEIA pioneered PhysX, a technology used for accurate simulation of in-game physics, and the PhysX API has now been ported, via CUDA, to CUDA-enabled GeForce 8-series and 9-series GPUs. NVIDIA says it will release PhysX drivers in the near future.

More than just a GPU

Put simply, any new NVIDIA GPU needs to be more than a pure gaming card. The flexible, general-purpose nature of these powerful architectures can be leveraged in eclectic ways. These range from speeding up multimedia-oriented applications to providing massively parallel processing power for a myriad of professional programs.

The two leading GPU makers - NVIDIA and ATI (part of AMD, of course) - are adamant that the growth in GPGPU (general-purpose computing on the GPU) will drive the need for faster cards. We'll begin to see the benefits of consumer-focused GPGPU in optimised video encoders that run fundamentally faster than they do on the CPU alone.

Following on from this, NVIDIA pitches the GTX 280 as both a gaming card and a parallel computing device (running CUDA-written apps). In other words, its general-purpose nature can be leveraged well beyond traditional graphics work.

What's in a name?

NVIDIA will release two new GPUs this month: GeForce GTX 280, followed by GTX 260. They mark a break with the current naming scheme, which is somewhat confusing for users who aren't savvy about what's new and what's not.

GeForce GTX 280: G80's bigger brother

Having pushed their existing architectures to the limit, NVIDIA and ATI had little choice but to opt for multi-GPU packages on single cards, in the shape of the GeForce 9800 GX2 and Radeon HD 3870 X2 SKUs. With the G80 core topping out in performance terms, NVIDIA could have debuted GeForce GTX 280 as another multi-chip card. It could have used a more efficient base and boosted overall throughput by, say, 20 per cent over incumbent high-end solutions.

Such an approach, much like the GeForce 9800 GX2, would have been only a short-term measure. Any new design needs to stay competitive for 18 months or so, with clock speeds - core, memory and shader - increasing as yields improve and smaller manufacturing processes are introduced.

NVIDIA, though, has opted to introduce GeForce GTX 280 as a single-GPU design. Reading between the lines, that means GTX 280 will be a big, big chip with lots of memory bandwidth and a healthy frame-buffer, taking the best bits of G80 and making them better.

Before jumping ship into Techville, we should note that one feature is conspicuous by its absence. Microsoft has now rolled the incremental DX10.1 upgrade into Vista SP1, and ATI jumped the gun by supporting it in the Radeon HD 3000-series of GPUs. NVIDIA, though, has skipped it completely with the GeForce GTX 200-series.

DX10.1 brings more efficient 3D rendering to the table, with particular benefits for antialiasing. Is NVIDIA wrong in not implementing it? No current games support it - Assassin's Creed even had it removed with the v1.02 patch - but we're still somewhat surprised by the omission.

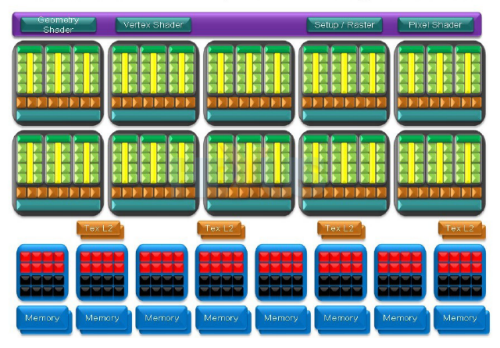

Gird your mental loins now as we take a look at what makes the GeForce GTX 280 tick along. Block-diagram time!

We'll talk about GeForce GTX 280 and then comment on how NVIDIA is differentiating the cheaper variant, GTX 260, which is due to be released next week.

Bigger, wider, faster, but cleverer?

Going from the top, GeForce GTX 280's setup is practically identical to G80's.

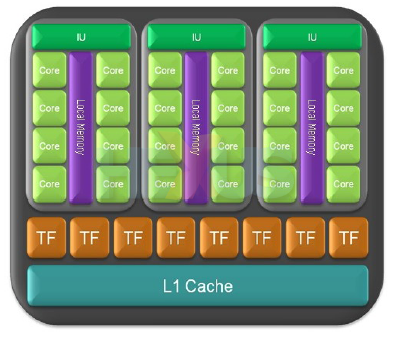

Perusing the meat of the architecture, NVIDIA packs in what it now terms 10 texture-processing clusters, compared with eight in high-end GeForce 8-series and 9-series GPUs. Each cluster is sub-divided into three streaming multiprocessors (up from two with 8-series and 9-series), though each SM has eight processing cores, just like the previous architectures.

Card-wide, that boils down (or up - Ed) to 240 scalar, floating-point stream processors (SPs), clustered in 10 groups of 24. Much like what's gone before - and we'll be saying that a lot - each processor is able to work on a number of threads at one time. Each thread operates on pixel, vertex or geometry data.
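The stream-processor total follows directly from the cluster hierarchy: clusters x SMs per cluster x SPs per SM. Here is a minimal Python sketch as an arithmetic check. The function name `stream_processor_count` is our own. The 9800 GTX figure is derived from the eight-cluster, two-SM, eight-core layout of the earlier high-end parts rather than taken from a spec sheet.

```python
def stream_processor_count(clusters, sms_per_cluster, sps_per_sm=8):
    """Total scalar stream processors = clusters x SMs per cluster x SPs per SM."""
    return clusters * sms_per_cluster * sps_per_sm


gtx_280 = stream_processor_count(clusters=10, sms_per_cluster=3)
geforce_9800_gtx = stream_processor_count(clusters=8, sms_per_cluster=2)
sps_per_cluster_gtx_280 = gtx_280 // 10  # 10 texture-processing clusters
print(gtx_280, sps_per_cluster_gtx_280, geforce_9800_gtx)
```

The third SM per cluster and the two extra clusters together take the count from 128 to 240.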

The flexible nature of the design means that any thread can be executed on any stream processor, hence the non-fixed, unified nature of GTX 200.

Thread execution

Keeping the processors filled to the brim with threads is important in a massively parallel architecture because it lets the GPU hide latency: while some threads wait on memory, others can run. Every streaming multiprocessor - each containing eight stream processors, remember - can keep 32 batches of threads in flight. NVIDIA calls these batches warps, and each one is made up of 32 threads.

The upshot is that each cluster, as shown above, can manage 3,072 threads (three SMs x 32 batches x 32 threads per batch). Given 10 clusters, GTX 280 can have up to 30,720 threads in flight at once. Put another way, each of the 240 stream processors can handle 128 threads.

In comparison, the GeForce 9800 GTX, NVIDIA's preferred single-GPU standard-bearer, carries eight clusters with two SMs per cluster. Each GeForce 9800 GTX SM handles 24 batches of 32 threads (768 threads). That gives a per-cluster count of 2 x 768 (1,536) and a GPU-wide count of 12,288 (8 x 1,536), or 96 per stream processor.
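The thread counts for both cards fall out of the same multiplication. The sketch below simply encodes the figures quoted above. The `GpuConfig` class and its field names are hypothetical, chosen for illustration. The per-SM batch limits (32 for GTX 280, 24 for 9800 GTX) are the numbers from the text, not anything queried from hardware.

```python
from dataclasses import dataclass


@dataclass
class GpuConfig:
    name: str
    clusters: int
    sms_per_cluster: int
    batches_per_sm: int         # maximum resident batches (warps) per SM
    threads_per_batch: int = 32
    sps_per_sm: int = 8

    def threads_per_cluster(self) -> int:
        return self.sms_per_cluster * self.batches_per_sm * self.threads_per_batch

    def threads_in_flight(self) -> int:
        return self.clusters * self.threads_per_cluster()

    def threads_per_sp(self) -> int:
        total_sps = self.clusters * self.sms_per_cluster * self.sps_per_sm
        return self.threads_in_flight() // total_sps


gtx_280 = GpuConfig("GeForce GTX 280", clusters=10, sms_per_cluster=3, batches_per_sm=32)
geforce_9800_gtx = GpuConfig("GeForce 9800 GTX", clusters=8, sms_per_cluster=2, batches_per_sm=24)
for gpu in (gtx_280, geforce_9800_gtx):
    print(gpu.name, gpu.threads_per_cluster(), gpu.threads_in_flight(), gpu.threads_per_sp())
```

Dividing the threads in flight by the total number of stream processors gives the per-SP figures of 128 and 96.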

Put simply, more threads in flight means more potential processing and, hopefully, better hiding of latency.
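Why do more threads help? A toy model makes the point. If each batch does a few cycles of work and then stalls on a long memory access, the scheduler stays busy only if enough other batches are ready to fill the gap. The simulation below is a deliberately simplified sketch, not a model of GT200's actual scheduler. It assumes one instruction issued per cycle, a hypothetical 100-cycle memory latency, four cycles of work between stalls, and a simple pick-the-first-ready-batch policy.

```python
def utilisation(num_warps, compute_cycles, latency_cycles, total_cycles=10_000):
    """Fraction of cycles in which the scheduler finds a ready batch (warp) to issue from.

    Toy model (assumption): each warp issues `compute_cycles` instructions, one per cycle,
    then stalls for `latency_cycles` waiting on memory, and repeats.
    """
    ready_at = [0] * num_warps                   # cycle at which each warp may next issue
    remaining = [compute_cycles] * num_warps     # instructions left before its next stall
    busy = 0
    for cycle in range(total_cycles):
        for w in range(num_warps):               # hypothetical policy: lowest-numbered ready warp
            if ready_at[w] <= cycle:
                busy += 1
                remaining[w] -= 1
                if remaining[w] == 0:            # warp hits a memory access and stalls
                    remaining[w] = compute_cycles
                    ready_at[w] = cycle + 1 + latency_cycles
                break                            # only one issue per cycle
    return busy / total_cycles


for warps in (1, 8, 16, 26, 32):
    print(warps, round(utilisation(warps, compute_cycles=4, latency_cycles=100), 3))
```

Under these assumptions, utilisation climbs roughly as warps x compute / (compute + latency) until it saturates at 1.0 with 26 batches. Real-world behaviour depends on the workload and on hardware details this sketch ignores.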