Just a phase

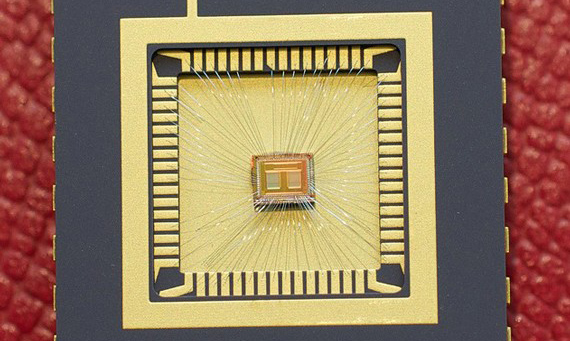

IBM has achieved what it calls a memory breakthrough: stable multi-bit phase-change memory (PCM), a first for the technology. At 100 times faster than flash, PCM certainly has its appeal, although commercial implementations are still some way off.

Phase-change memory has been under development for decades, but IBM's experiment is the first time that stable storage of more than a single bit per cell has been achieved. Like MLC flash chips, multi-bit PCM is able to store two bits per cell (the combinations 00, 01, 10 and 11), giving a sizeable improvement in memory density.
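To make the density point concrete, here is a minimal Python sketch of how a byte maps onto two-bit cells. It is an illustration only, not IBM's encoding scheme: the function names `bytes_to_cells` and `cells_to_bytes` are hypothetical, and the sketch assumes each cell simply holds one of four levels (0 to 3) standing for 00, 01, 10 and 11.

```python
def bytes_to_cells(data: bytes, bits_per_cell: int = 2) -> list:
    """Split each byte into cell levels, most significant bits first.

    Hypothetical helper: with 2 bits per cell, each level is 0..3,
    standing for the combinations 00, 01, 10 and 11.
    """
    if bits_per_cell <= 0 or 8 % bits_per_cell != 0:
        raise ValueError("bits_per_cell must divide 8 evenly")
    mask = (1 << bits_per_cell) - 1
    cells = []
    for byte in data:
        # Walk the byte from its top bits down to its bottom bits.
        for shift in range(8 - bits_per_cell, -1, -bits_per_cell):
            cells.append((byte >> shift) & mask)
    return cells


def cells_to_bytes(cells: list, bits_per_cell: int = 2) -> bytes:
    """Inverse of bytes_to_cells: reassemble cell levels into bytes."""
    if bits_per_cell <= 0 or 8 % bits_per_cell != 0:
        raise ValueError("bits_per_cell must divide 8 evenly")
    cells_per_byte = 8 // bits_per_cell
    if len(cells) % cells_per_byte != 0:
        raise ValueError("cell count does not fill whole bytes")
    out = bytearray()
    for i in range(0, len(cells), cells_per_byte):
        value = 0
        for level in cells[i:i + cells_per_byte]:
            value = (value << bits_per_cell) | level
        out.append(value)
    return bytes(out)


if __name__ == "__main__":
    payload = b"PCM"
    single_bit = bytes_to_cells(payload, bits_per_cell=1)
    multi_bit = bytes_to_cells(payload, bits_per_cell=2)
    # Two bits per cell needs half as many cells for the same data.
    print(len(single_bit), len(multi_bit))
    print(cells_to_bytes(multi_bit) == payload)
```

Under these assumptions, the same data occupies half as many cells at two bits per cell as it does at one bit per cell, which is where the density gain comes from.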

Where phase-change memory improves upon flash is in its speed and durability. IBM's testing saw a 'worst-case' latency of around 10 microseconds, some 100 times faster than flash memory. Where flash tops out at some 3,000 write cycles per cell at consumer grade and 30,000 at enterprise grade, IBM says that PCM is good for over 10 million write cycles, making it a much better fit for long-term enterprise use.
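The endurance gap is easier to appreciate as arithmetic. The short Python sketch below uses only the cycle counts quoted above, treating "over 10 million" as a lower bound. The helper names and the rewrite rate of ten full rewrites per day are hypothetical, and the lifetime estimate assumes every cell wears evenly, which real devices only approximate.

```python
# Write-cycle figures quoted in the article.
FLASH_CONSUMER_CYCLES = 3_000
FLASH_ENTERPRISE_CYCLES = 30_000
PCM_CYCLES = 10_000_000  # "over 10 million", so treated as a lower bound


def endurance_ratio(pcm_cycles: int, flash_cycles: int) -> float:
    """How many times more write cycles PCM offers than flash."""
    return pcm_cycles / flash_cycles


def years_until_worn(cycles: int, rewrites_per_day: float) -> float:
    """Rough lifetime in years, assuming perfectly even wear.

    rewrites_per_day is a hypothetical workload: how often each cell
    is rewritten per day on average.
    """
    if rewrites_per_day <= 0:
        raise ValueError("rewrites_per_day must be positive")
    return cycles / rewrites_per_day / 365


if __name__ == "__main__":
    print(round(endurance_ratio(PCM_CYCLES, FLASH_CONSUMER_CYCLES)))
    print(round(endurance_ratio(PCM_CYCLES, FLASH_ENTERPRISE_CYCLES)))
    # Hypothetical workload of ten rewrites per cell per day.
    for name, cycles in [
        ("consumer flash", FLASH_CONSUMER_CYCLES),
        ("enterprise flash", FLASH_ENTERPRISE_CYCLES),
        ("PCM", PCM_CYCLES),
    ]:
        print(name, round(years_until_worn(cycles, 10), 1))
```

On the quoted figures, PCM offers at least roughly 3,300 times the write endurance of consumer flash and roughly 330 times that of enterprise flash.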

According to Dr. Haris Pozidis, Manager of Memory and Probe Technologies at IBM Research: "By demonstrating a multi-bit phase-change memory technology which achieves for the first time reliability levels akin to those required for enterprise applications, we made a big step towards enabling practical memory devices based on multi-bit PCM."

Despite having made this 'breakthrough', IBM isn't going to be changing the world any time soon. The company doesn't plan to create any products itself and will instead look to license its patents to interested third parties. It also doesn't expect products featuring PCM before 2016, by which time it's possible that innovations in flash will have negated the benefits of PCM altogether.